Some pieces of code are a source of great pride and some pieces of code are a source of terrible shame. It is due to the nature of game development, I believe, that many of the most interesting pieces of code I write eventually become both. That is certainly the case with the CrossStitch shader assembler, my first significant undertaking in the field of computer graphics.

CrossStitch has been the foundation of Day 1’s graphics libraries for over seven years now, and for almost half of that time it has been a regular source of frustration and embarrassment for me. What I’d like to do is vent my frustration and write an article explaining all the ways in which I went wrong with CrossStitch and why, despite my hatred of it, I continue to put up with it. But before I can do that I need to write this article, a remembrance of where CrossStitch came from and how I once loved it so much.

My first job in the game industry had me implementing the in-game UI for a flight simulator. When that project was canceled, I switched to an adventure game, for which I handled tools and 3ds Max exporter work and later gameplay and graphical scripting language design. A couple of years later I found myself in charge of the networking code for an action multiplayer title, and shortly after that I inherited the AI and audio code. Next I came back to scripting languages for a bit and worked with Lua integration, then I spent six months writing a physics engine before becoming the lead on the Xbox port of a PS2 title. I only had a few people reporting to me as platform lead, so my responsibilities ended up being core systems, memory management, file systems, optimization, and pipeline tools.

When I interviewed at Day 1, one of the senior engineers asked me what I thought my greatest weakness was as a game developer and I answered honestly that although I’d worked in almost every area of game development, I’d had little or no exposure to graphics. Imagine my surprise when less than a month after he hired me the studio director came to me and asked if I’d be willing to step into the role of graphics engineer. It turned out that both of Day 1’s previous graphics engineers had left shortly before my arrival, and the company was without anyone to fill the role. I was excited for the challenge and the opportunity to try something new, so I quickly accepted the position and went to work.

This left me in a very strange position, however. I was inheriting the graphics code from MechAssault, so I had a fully working, shippable code base to cut my teeth on, but I was also inheriting a code base with no owner in a discipline for which I had little training, little experience, and no available mentor. The title we were developing was MechAssault 2, and given that it was a second generation sequel to an unexpected hit, there was a lot of pressure to overhaul the code and attempt a major step forward in graphics quality.

The MechAssault 1 graphics engine was first generation Xbox exclusive, which meant fixed-function hardware vertex T&L and fixed-function fragment shading. As I began to familiarize myself with the code base and the hardware capabilities, I realized that in order to implement the sort of features that I envisioned for MechAssault 2, I’d need to convert the engine over to programmable shading. The Xbox had phenomenal vertex shading capability and extremely limited fragment shading capability, so I wanted to do a lot of work in the vertex shader and have the flexibility to pass data to the fragment shader in whatever clever arrangement would allow me to use just a couple instructions to apply whatever per-pixel transformations were necessary to get attractive results on screen.

The problem was that shaders are monolithic single-purpose programs and the entire feature set and design of the graphics engine were reliant on the dynamic, mix-and-match capabilities of the fixed-function architecture. This is where my lack of experience in graphics and my lack of familiarity with the engine became a problem, because although I was feeling held back by it, I didn’t feel comfortable cutting existing features or otherwise compromising the existing code. I wanted the power of programmable shading, but I needed it in the form of a configurable, fixed-function-like pipeline that would be compatible with the architecture I had inherited.

I pondered this dilemma for a few days and eventually a plan for a new programmable shader pipeline started to emerge. I asked the studio director if I could experiment with converting the graphics engine from fixed-function to programmable shaders, and he found two weeks in the schedule for me to try. Our agreement was that in order to claim success at the end of two weeks, I had to have our existing feature set working in programmable shaders and I had to be able to show no increase in memory use and no decrease in performance.

My plan was to write a compiler and linker for a new type of shader program, called shader fragments. Instead of an object in the game having to specify its entire shader program in a single step, the code path that led to rendering an object would be able to add any number of shader fragments to a list, called a shader chain, and finally the chain would be linked together into a full shader program in time for the object to draw.
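As a rough illustration of the idea, here is a minimal Python sketch of a shader chain. The class names, the fragment names, and the assembly-like strings are all hypothetical placeholders rather than the actual CrossStitch code, and the "link" step here is plain concatenation; a real linker would also have to resolve named variables to registers.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ShaderFragment:
    """Hypothetical stand-in for one compiled shader fragment."""
    name: str
    code: tuple  # lines of placeholder shader assembly


@dataclass
class ShaderChain:
    """Ordered list of fragments collected while preparing one object."""
    fragments: list = field(default_factory=list)

    def clear(self):
        self.fragments.clear()

    def add(self, fragment):
        self.fragments.append(fragment)

    def link(self):
        """Join the fragments' code, in the order added, into one program."""
        lines = []
        for fragment in self.fragments:
            lines.append(f"; {fragment.name}")
            lines.extend(fragment.code)
        return "\n".join(lines)


# Placeholder fragments; the instruction text is illustrative only.
rigid_transform = ShaderFragment("rigid_transform", ("m4x4 world_pos, v0, c0",))
vertex_fog = ShaderFragment("vertex_fog", ("mov oFog, world_pos.z",))

chain = ShaderChain()
chain.clear()
chain.add(rigid_transform)
chain.add(vertex_fog)
program = chain.link()
```

The point of the sketch is only that each system contributes its piece independently and the full program exists only once the chain is linked.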

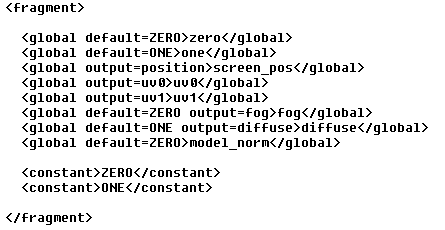

My first task was to define the syntax for shader fragments. I had very limited time so I started with an easily parsable syntax, XML. Fragments consisted of any number of named variables, any number of constant parameters, and a single block of shader assembly code. Named variables were either local or global in scope, and they could specify several options such as default initialization, input source (for reading data from vertex attributes), output name (for passing data to fragment shaders), and read-only or write-only flags.
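To make that description concrete, the sketch below parses a made-up fragment with Python's standard `xml.etree.ElementTree`. The element and attribute names (`fragment`, `variable`, `constant`, `code`, `scope`, `access`, `output`) and the instruction text are assumptions for illustration; the real CrossStitch schema is not reproduced here.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment; the tag and attribute names are invented.
FRAGMENT_XML = """
<fragment name="eye_vector">
  <variable name="world_pos" scope="global" access="read"/>
  <variable name="eye_vec" scope="global" output="oT1"/>
  <constant name="camera_pos"/>
  <code>
    add eye_vec, camera_pos, -world_pos
  </code>
</fragment>
"""


def parse_fragment(xml_text):
    """Split a fragment into named variables, constants, and code lines."""
    root = ET.fromstring(xml_text.strip())
    variables = {v.get("name"): dict(v.attrib) for v in root.findall("variable")}
    constants = [c.get("name") for c in root.findall("constant")]
    code_text = root.findtext("code", "")
    code = [line.strip() for line in code_text.splitlines() if line.strip()]
    return {
        "name": root.get("name"),
        "variables": variables,
        "constants": constants,
        "code": code,
    }


fragment = parse_fragment(FRAGMENT_XML)
```

Leaning on an off-the-shelf XML parser is what makes this kind of syntax cheap to support when time is short: the only custom work left is interpreting the variable options and the code block.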

I wrote a simple C preprocessor, an XML parser, and a compiler to transform my fragments into byte-code. Compiled fragments were linked directly into the executable along with a header file defining the constant parameter names. Rendering of every object began with clearing the current shader chain and adding a fragment that defined some common global variables with default values. For example, diffuse was a global, one-initialized variable available to every shader chain and screen_pos was a global variable mapped to the vertex shader position output. Once the default variables were defined, a mesh might add a new fragment that defined a global variable, vertex_diffuse, with initialization from a vertex attribute and additional global variables for world-space position and normals. Depending on the type of mesh, world-space position might be a rigid transformation of the vertex position, a single or multi-bone skinned transformation of the vertex position, or a procedurally generated position from a height field or particle system.

Common variables were defined for all shader chains.

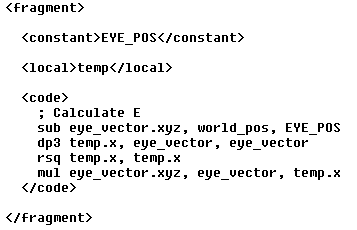

A shader fragment for generating the eye vector.

With world-space position and normals defined, other systems could then add fragments to accumulate per-vertex lighting into the global diffuse variable and to modulate diffuse by material_diffuse or vertex_diffuse. Systems could also continue to define new variables and outputs, for example per-vertex fog or, in the case of per-pixel lighting, tangent space. Precedence rules also allowed fragments to remap the inputs or outputs or change the default initialization of variables defined in earlier fragments.
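One way to picture those precedence rules is as a merge of variable declarations in chain order. The sketch below assumes the simplest possible rule, where a later fragment's options override an earlier fragment's options for the same variable; the actual CrossStitch rules may well have been more involved, and the option names (`init`, `input`, `output`) and register names are hypothetical.

```python
def resolve_variables(chain):
    """Merge variable declarations in chain order; later fragments win."""
    resolved = {}
    for fragment in chain:
        for name, options in fragment.items():
            merged = dict(resolved.get(name, {}))
            merged.update(options)  # assumed rule: last declaration wins
            resolved[name] = merged
    return resolved


# Each fragment is reduced to its variable declarations for this sketch.
defaults = {"diffuse": {"init": "one"}, "screen_pos": {"output": "oPos"}}
mesh = {"vertex_diffuse": {"input": "v_color"}}
lighting = {"diffuse": {"init": "zero"}}  # re-initialize before accumulating

resolved = resolve_variables([defaults, mesh, lighting])
```

Under this assumed rule, a lighting fragment could change `diffuse` from one-initialized to zero-initialized without touching the fragment that first declared it.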

The final step in rendering an object was to transform the active shader chain into a shader program. Shader programs were compiled on the fly whenever a new shader chain was encountered, resulting in a small but noticeable frame rate hitch. Luckily, the shader programs were cached to disk between runs so the impact of new shader compilations was minimal. I had promised to add no additional CPU or GPU cost to rendering, so I put a lot of care into the code for adding shader fragments to shader chains and for looking up the corresponding shader programs.
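The lookup described here can be sketched as a cache keyed by the sequence of fragments in the chain. This is an assumption-laden illustration, not the original code: the class name, the fragment identifiers, and the `fake_link` stand-in are invented, and the cache lives only in memory, whereas the real system also persisted programs to disk between runs.

```python
class ProgramCache:
    """Map a shader chain (sequence of fragment ids) to a linked program."""

    def __init__(self, link):
        self._link = link      # callable that builds a program from a chain
        self._programs = {}
        self.misses = 0        # counts the expensive on-the-fly compiles

    def lookup(self, chain):
        key = tuple(chain)     # order matters, so the key keeps it
        program = self._programs.get(key)
        if program is None:
            self.misses += 1
            program = self._link(key)
            self._programs[key] = program
        return program


def fake_link(fragment_ids):
    """Stand-in for the real linker; just joins the ids."""
    return "+".join(fragment_ids)


cache = ProgramCache(fake_link)
first = cache.lookup(["defaults", "rigid_transform", "point_light"])
second = cache.lookup(["defaults", "rigid_transform", "point_light"])
```

Only the first lookup pays for linking; the second is a dictionary hit, which is the property that keeps the per-object cost low once a chain has been seen.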

It was a nerve-racking few weeks for me, however, because it wasn’t until I’d implemented the entire system and replaced every fixed-function feature supported by the engine with equivalent shader fragments that I was able to do side-by-side performance comparisons. Amazingly, the CPU costs ended up being nearly indistinguishable. Setting state through the fixed-function calls or setting shader constants and shader fragments was so close in performance that victory by even a narrow margin couldn’t be determined.

The results on the GPU were equally interesting. The vertex shader implementation outperformed the fixed-function equivalent for everything except lighting. Simple materials with little or no lighting averaged 10–20% faster in vertex shader form. As the number of lights affecting a material increased, however, the margin dropped until the fixed-function implementation took the lead. Our most complex lighting arrangement, a material with ambient, directional, and three point lights, was 25% slower on the GPU when implemented in a vertex shader. With the mix of materials in our MechAssault levels, the amortized results ended up being about a 5% drop in vertex throughput. This wasn’t a big concern for me, however, because we weren’t even close to being vertex bound on the Xbox, and there were several changes I wanted to make to the lighting model that weren’t possible in fixed-function but would shave a few instructions off the light shaders.

The studio director was pleased with the results, so I checked in my code and overnight the game went from purely fixed-function graphics to purely programmable shaders. The shader fragment system, which I named CrossStitch, opened up the engine to a huge variety of GPU-based effects, and the feature set of the engine grew rapidly to become completely dependent on shader fragments. By the time we shipped MechAssault 2, our rendering was composed of over 100 unique shader fragments. These fragments represented billions of possible shader program permutations, though thankfully only a little more than 500 were present in our final content.

Here are some examples of features in MechAssault 2 that were made possible by shader fragments:

- a new particle effects system which offloaded some of the simulation costs to the GPU

- procedural vertex animation for waving flags and twisting foliage

- a space-warp effect attached to certain explosions and projectiles that deformed the geometry of nearby objects

- mesh bloating and stretching modifiers for overlay shields and motion blur effects

- GPU-based shadow volume extrusion

- per-vertex and per-pixel lighting with numerous light types and variable numbers of lights

- GPU-based procedural UV generation for projected textures, gobo lights, and decals

- content-dependent mesh compression with GPU-based decompression

By the end of MechAssault 2 I was convinced CrossStitch was the only way to program graphics. I absolutely loved the dynamic shader assembly model I’d implemented, and I never had a second’s regret over it. It was fast, memory efficient, and allowed me to think about graphics in the way I found most natural, as a powerful configurable pipeline of discrete transformations. If only the story could end there.

In the future I’ll have to write a follow-up to this article describing how CrossStitch evolved after MechAssault 2. I’ll have to explain how it grew in sophistication and capability to keep pace with the advances in 3-D hardware, to support high-level language syntax and to abstract away the increasing number of programmable hardware stages. I’ll have to explain why the path it followed seemed like such a good one, offering the seduction of fabulous visuals at minimal runtime cost. But most importantly, I’ll have to explain how far along that path I was before I realized it was headed in a direction I didn’t want to be going, a direction completely divergent from the rest of the industry.

Completely fascinating, I’m excited for the next installment!

Yes, more please 🙂

Great write-up, I agree — look forward to the next installment. Very much enjoying the blog, and glad you’re taking the time to put together well written posts with interesting details.

Excellent read, as usual. Looking forward to hearing what happens next…

I rarely comment, however i did a few searching and wound up here CrossStitch: A Configurable Pipeline for Programmable Shading – Game Angst. And I actually do have 2 questions for you if you usually do not mind. Could it be only me or does it look like some of these responses look like they are written by brain dead people? 😛 And, if you are posting at other sites, I’d like to keep up with everything fresh you have to post. Would you make a list of every one of your public pages like your linkedin profile, Facebook page or twitter feed?

I find it is difficult to look clever in blog comments. 🙂

To answer your other question, I don’t have much of a public presence beyond this blog. You should be able to find me on LinkedIn (Adrian Stone at Day 1 Studios, now Wargaming West) but my profile there is also minimal. Even this blog has suffered recently because my company has been experiencing some upheaval.

I hope to be presenting at some conferences in the near future. If that happens, I’ll be sure to advertise the fact on this blog.