In my last article, I described the first generation of CrossStitch, the shader assembly system used in MechAssault 2. Today I’m going to write about the second generation of CrossStitch, the one used in Fracture.

Work on CrossStitch 2.0 began with the development of Day 1’s Despair Engine. This was right at the end of MechAssault 2, when the Xbox 360 was still an Apple G5 and the Cell processor was going to be powering everything from supercomputers to kitchen appliances in a few years. It is hard to believe, looking back, but at that time we were still debating whether to adopt a high-level shading language for graphics. There were respected voices on the platform side insisting that the performance advantage of writing shaders in assembly language would justify the additional effort. Thankfully, I sided with the HLSL proponents, but that left me with the difficult decision of what to do about CrossStitch.

CrossStitch was a relatively simple system targeting a single, very constrained platform. HLSL introduced multiple target profiles, generic shader outputs, and literal constants, not to mention a significantly more complex and powerful language syntax. On top of that, Despair Engine was intended to be cross-platform, and we didn’t even have specs for some of the platforms we were promising to support. Because of this, we considered dispensing with dynamic shader linking entirely and adopting a conventional HLSL pipeline, implementing our broad feature set with a mixture of compile-time, static, and dynamic branching. In the end, however, I had enjoyed so much success with the dynamic shader linking architecture of MechAssault 2 that I couldn’t bear to accept either the performance cost of runtime branching or the clunky limitations of precomputing all possible shader permutations.

The decision was made: Despair Engine would feature CrossStitch 2.0. I don’t recall how long it took me to write the first version of CrossStitch 2.0. The early days of Despair development are a blur because we were supporting the completion of MechAssault 2 while bootstrapping an entirely new engine on a constantly shifting development platform, and work was always proceeding on all fronts. I do know, however, that by December of 2004 Despair Engine had a functional implementation of dynamic shader linking in HLSL.

CrossStitch 2.0 is similar in design to its predecessor. It features a front-end compiler that transforms shader fragments into an intermediate binary, and a back-end linker that transforms a chain of fragments into a full shader program. The difference, of course, is that the front-end compiler now parses HLSL syntax and the back-end linker generates HLSL programs. Because CrossStitch 1.0 had been mostly limited to vertex shaders with fixed output registers, CrossStitch 2.0 introduced a more flexible model for passing data between pipeline stages. Variables can define, and be mapped to, named input and output channels, and each shader chain requires an input signature from the stage preceding it and generates an output signature for the stage following it.
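To make the signature model concrete, here is a minimal Python sketch of the linking step, assuming a hypothetical `Fragment` record that lists the named channels a fragment reads and writes. It is not CrossStitch’s actual data format; it only shows how a chain can be checked against the input signature of the preceding stage, and how an output signature for the next stage falls out of what the fragments write.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    """Hypothetical compiled fragment: the channels it reads and writes plus its code."""
    name: str
    reads: frozenset
    writes: frozenset
    body: str  # HLSL text, treated as opaque by this sketch


def link_chain(chain, input_signature):
    """Link a fragment chain against the previous stage's input signature.

    Returns (source, output_signature), where the output signature is the set
    of channels written by the chain. Raises ValueError when a fragment reads a
    channel that neither the input signature nor an earlier fragment provides.
    """
    available = set(input_signature)
    written = set()
    parts = []
    for frag in chain:
        missing = frag.reads - available
        if missing:
            raise ValueError(f"{frag.name} reads unbound channels {sorted(missing)}")
        parts.append(f"// {frag.name}\n{frag.body}")
        available |= frag.writes
        written |= frag.writes
    return "\n".join(parts), frozenset(written)
```

Linking a chain that reads `color` against an input signature that lacks it raises an error, which is exactly the kind of mismatch that explicit signatures make visible at link time.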

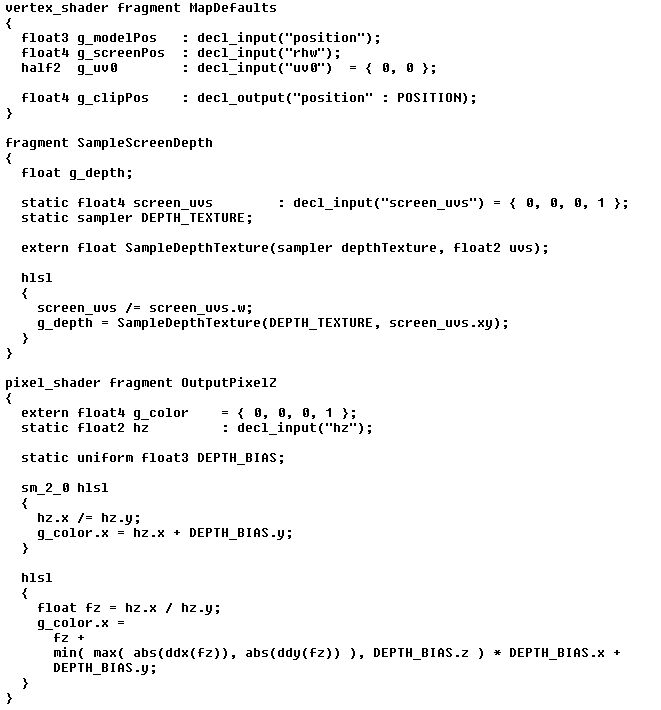

A sampling of early HLSL shader fragments.

CrossStitch’s primary concern is GPU runtime efficiency, so it is nice that shaders are compiled with full knowledge of the data they’ll be receiving either from vertex buffers or interpolators. If, for example, some meshes include per-vertex color and some don’t, the same series of shader fragments will generate separate programs optimized for each case. It turns out that this explicit binding of shader programs to attributes and interpolators is a common requirement of graphics hardware, and making the binding explicit in CrossStitch allows for some handy optimizations on fixed consoles.
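One way to get a separate, specialized program per vertex format is to make the input signature part of the program’s cache key. The sketch below assumes a hypothetical `program_key` helper that hashes the ordered fragment chain, the unordered input signature, and the target profile; CrossStitch’s real key format isn’t described in this article.

```python
import hashlib


def program_key(fragment_names, input_signature, profile):
    """Return a hypothetical cache key for one linked shader program.

    The fragment chain is order-sensitive; the input signature (the vertex
    attributes or interpolators actually available) is a set, so it is sorted
    before hashing. A mesh with vertex color and one without therefore get
    different keys, and so different compiled programs.
    """
    identity = (tuple(fragment_names), tuple(sorted(input_signature)), profile)
    return hashlib.sha1(repr(identity).encode("utf-8")).hexdigest()
```

SHA-1 is used here only as a compact, stable identifier, not for security.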

The early results from CrossStitch 2.0 were extremely positive. The HLSL syntax was a nice break from assembly, and the dynamic fragment system allowed me to quickly and easily experiment with a wide range of options as our rendering pipeline matured. Just as had happened with MechAssault 2, the feature set of Despair expanded rapidly to become heavily reliant on the capabilities of CrossStitch. The relationship proved circular too. Just as CrossStitch facilitated a growth in Despair’s features, Despair’s features demanded a growth in CrossStitch’s capabilities.

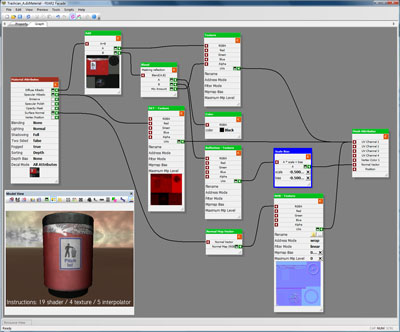

The biggest example of this is Despair’s material editor, Façade. Façade is a graph-based editor that allows content creators to design extremely complex and flexible materials for every asset. The materials are presented as a single pipeline flow, taking generic mesh input attributes and transforming them through a series of operations into a common set of material output attributes. To implement Façade, I both harnessed and extended the power of CrossStitch. Every core node in a Facade material graph is a shader fragment. I added reflection support to the CrossStich compiler, so adding a new type of node to Façade is as simple as creating a new shader fragment and annotating its public-facing variables. Since CrossStitch abstracts away many of the differences between pipeline stages, Façade material graphs don’t differentiate between per-vertex and per-pixel operations. The flow of data between pipeline stages is managed automatically depending on the requirements of the graph.

Façade Material Editor
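The automatic placement of graph nodes in the vertex or pixel stage can be sketched as a single pass over the graph in topological order. This is an illustration under assumptions, not Façade’s actual algorithm: the graph format (a dict from each node to its input nodes) and the `pixel_only` set are hypothetical, and every node is placed in the earliest stage its inputs and constraints allow. Any vertex-stage value consumed by a pixel-stage node becomes an interpolant.

```python
VERTEX, PIXEL = 0, 1


def assign_stages(graph, pixel_only):
    """Place each node of a material graph in the earliest stage it can run.

    graph: dict mapping node name -> list of input node names, listed in
    topological order (a hypothetical format). Nodes with no inputs are mesh
    attributes. pixel_only: nodes that must run per pixel (e.g. texture
    samples). Returns (stage_of, interpolants), where interpolants are the
    vertex-stage values that pixel-stage nodes consume.
    """
    stage_of = {}
    for node, inputs in graph.items():
        stage = PIXEL if node in pixel_only else VERTEX
        for src in inputs:
            # A node can run no earlier than the latest of its inputs.
            stage = max(stage, stage_of[src])
        stage_of[node] = stage
    interpolants = sorted({
        src
        for node, inputs in graph.items()
        for src in inputs
        if stage_of[node] == PIXEL and stage_of[src] == VERTEX
    })
    return stage_of, interpolants
```

Hoisting every eligible node to the vertex stage is the most aggressive policy. A term like `n_dot_l` evaluated per vertex and interpolated gives Gouraud-style lighting, so a real system needs a way to pin quality-sensitive nodes to the pixel stage, which is what `pixel_only` stands in for here.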

It was about six months after the introduction of Façade that the first cracks in CrossStitch began to appear. The problem was shader compilation time. On MechAssault 2 we measured shader compilation times in microseconds. Loading a brand-new level with no cached shader programs in MechAssault 2 might cause a half-second hitch as a hundred new shaders were compiled. If a few new shaders were encountered during actual play, a couple of extra milliseconds in a frame didn’t impact the designers’ ability to evaluate their work. Our initial HLSL shaders were probably a hundred times slower to compile than that, even on a high-end, branch-friendly PC. By the end of 2005 we had moved to proper Xbox 360 development kits, and our artists had mastered designing complex effects in Façade. Single shaders were now taking as long as several seconds to compile, and virtually every asset represented a half-dozen unique shaders.

The unexpected increase of four to five orders of magnitude in shader compilation times proved disastrous. CrossStitch was supposed to allow the gameplay programmers, artists, and designers to remain blissfully ignorant of how the graphics feature set was implemented. Now, all of a sudden, everyone on the team was aware of the cost of shader compilation. The pause for shader compilation was long enough that it could easily be mistaken for a crash, and, since it was done entirely on the fly, on-screen notification of the event couldn’t be given until after it was complete. Attempts to make shader compilation asynchronous weren’t very successful because at best objects would pop in seconds after they were supposed to be visible, and at worst a subset of the passes in a multipass process would be skipped, resulting in unpredictable graphical artifacts. Making matters worse, the long delays at level load were followed by massive hitches as new shaders were encountered during play. It seemed like no matter how many times a designer played a level, new combinations of lighting and effects would be encountered, and repeated, second-long frame-rate hitches would make evaluating the gameplay impossible.

Something had to be done and fast.

Simple optimization was never an option because almost the entire cost of compilation was in the HLSL compiler itself. Instead, I focused my efforts on the CrossStitch shader cache. The local cache was made smarter and more efficient, and it was extended so that multiple caches could be processed simultaneously. That allowed the QA staff to start checking in their shader caches, which meant tested assets came bundled with all their requisite shaders. Of course, content creators frequently work with untested assets, so there was still a lot of redundant shader compilation going on.

To further improve things, we introduced a network shader cache. Shaders were still compiled on target, but when a missing shader was encountered, the client would first try to fetch it from a network server and would compile it locally only if the server didn’t have it. Clients updated the servers with newly compiled shaders, and since Day 1 has multiple offices and supports distributed development, the servers had to be smart enough to act as proxies for one another.
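The lookup order described above can be sketched as a layered cache. In this Python sketch, dicts stand in for the checked-in QA caches and the network server, and `compile_fn` stands in for the on-target HLSL compiler; the class and its names are hypothetical, and the proxying between servers in different offices is left out.

```python
class ShaderCache:
    """Hypothetical layered lookup: local cache, checked-in caches, network, compile.

    `network` is any dict-like object standing in for the shared server;
    `compile_fn` stands in for the platform shader compiler.
    """

    def __init__(self, network, compile_fn, checked_in=()):
        self.local = {}
        self.checked_in = list(checked_in)  # read-only caches shipped with assets
        self.network = network
        self.compile_fn = compile_fn
        self.compile_count = 0

    def get(self, key, source):
        if key in self.local:
            return self.local[key]
        for cache in self.checked_in:
            if key in cache:
                binary = cache[key]
                break
        else:
            binary = self.network.get(key)
            if binary is None:
                binary = self.compile_fn(source)  # the slow path
                self.compile_count += 1
                self.network[key] = binary  # publish for the rest of the team
        self.local[key] = binary
        return binary
```

Two clients sharing one server means only the first client to encounter a shader pays for compiling it.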

With improvements to the shader cache, life with dynamic, on-the-fly shader compilation was tolerable but not great. The caching system has only had a few bugs in its lifetime, but it is far more complicated than you might expect and only really understood by a couple of people. Consequently, a sort of mythology has developed around the shader cache. Just as programmers will suggest a full rebuild to one another as a possible solution to an inexplicable code bug, content creators and testers can be heard asking each other, “have you tried deleting your shader cache?”

At the same time as I was making improvements to the shader cache, I was also working toward the goal of having all shaders needed for an asset compiled at the time the asset was loaded. I figured compiling shaders at load time would solve the in-game hitching problem, and it also seemed like a necessary step toward my eventual goal of moving shader compilation offline. Unfortunately, doing that without fundamentally changing the nature and usage of CrossStitch was equivalent to solving the halting problem. CrossStitch exposes literally billions of possible shader programs to the content, taking advantage of the fact that only a small fraction of those will actually be used. Which fraction, however, is determined by a mind-bending, platform-specific tangle of artist content, Lua script, and C++ code.

I remember feeling pretty pleased with myself at the end of MechAssault 2 when I learned that Far Cry required a 430-megabyte shader cache compared to MA2’s svelte 500-kilobyte cache. That satisfaction evaporated pretty quickly during the man-weeks I spent tracking down unpredicted shader combinations in Fracture.

Even so, by the time we entered full production on Fracture, shader compilation was about as good as it was ever going to get. A nightly build process loaded every production level and generated a fresh cache. The build process updated the network shader cache in addition to updating the shader cache distributed with resources, so the team had a nearly perfect cache to start each day with.

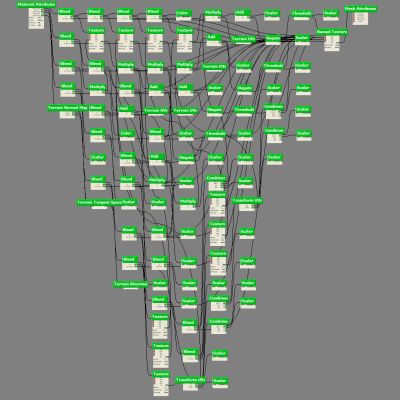

As if the time costs of shader compilation weren’t enough, CrossStitch suffered from an even worse problem on the Xbox 360. Fracture’s terrain system implemented a splatting system that composited multiple Facade materials into a localized über material, and then decomposed the über material into multiple passes according the register, sampler, and instruction limits of the target profile. The result was some truly insane shader programs.

The procedurally generated material for one pass of a terrain tile in Fracture.
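Splitting an über material into passes is essentially a packing problem. The following is a minimal greedy sketch, assuming each terrain layer’s instruction, sampler, and constant usage is known up front and that layers must stay in order because each pass blends over the previous one. `Budget` and `split_into_passes` are hypothetical names, and a real splitter would also have to account for per-pass setup overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    """Resource usage of one terrain layer, or the per-pass limits of a profile."""
    instructions: int
    samplers: int
    constants: int

    def fits(self, other):
        return (self.instructions <= other.instructions
                and self.samplers <= other.samplers
                and self.constants <= other.constants)

    def __add__(self, other):
        return Budget(self.instructions + other.instructions,
                      self.samplers + other.samplers,
                      self.constants + other.constants)


def split_into_passes(layers, limits):
    """Greedily pack ordered (name, Budget) layers into passes within `limits`.

    Layer order is preserved because each pass blends over the previous one.
    """
    passes, current, used = [], [], Budget(0, 0, 0)
    for name, cost in layers:
        if not cost.fits(limits):
            raise ValueError(f"layer {name} exceeds the profile limits on its own")
        if current and not (used + cost).fits(limits):
            # Adding this layer would break a limit: close the current pass.
            passes.append(current)
            current, used = [], Budget(0, 0, 0)
        current.append(name)
        used = used + cost
    if current:
        passes.append(current)
    return passes
```

With a four-sampler limit and layers that each use two samplers, three layers split into a two-layer pass and a one-layer pass.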

A few Fracture terrain shaders took over 30 seconds to compile and consumed over 160 megabytes of memory in the process. Since the Xbox 360 development kits have no spare memory, this posed a major problem. There were times when the content creators would generate a shader that could not be compiled on target without running out of memory and crashing. It has only happened three times in five years, but we’ve actually had to run the game in a special, minimal memory mode in order to free up enough memory to compile a necessary shader for a particularly complex piece of content. Once the shader is present in the network cache, the offending content can be checked in and the rest of the team is none the wiser.

Such things are not unusual in game development, but it still kills me to be responsible for such a god-awful hack of a process.

And yet, CrossStitch continues to earn its keep. Having our own compiler bridging the gap between our shader code and the platform compiler has proved to be a very powerful thing. When we added support for the PlayStation 3, Chris modified the CrossStitch back-end to compensate for little differences in the Cg compiler. When I began to worry that some of our shaders were interpolator-bound on the Xbox 360, the CrossStitch back-end was modified to perform automatic interpolator packing. When I added support for Direct3D 10 and several texture formats went missing, CrossStitch allowed me to emulate the missing texture formats in late-bound fragments. There doesn’t seem to be a problem CrossStitch can’t solve, except, of course, for the staggering inefficiency of its on-target, on-the-fly compilation.
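Interpolator packing amounts to fitting interpolants of one to four components into as few four-component registers as possible. The sketch below uses first-fit decreasing bin packing; that heuristic is chosen here for illustration and is not necessarily what the CrossStitch back-end does, and it ignores constraints such as interpolation modifiers that a real packer must respect.

```python
def pack_interpolators(interpolants):
    """Pack interpolants into float4 registers with first-fit decreasing.

    interpolants: dict name -> component count (1-4). Returns
    (assignment, register_count), where assignment maps each name to
    (register index, swizzle).
    """
    free = []  # free components left in each register
    assignment = {}
    # Largest first; ties broken by name so the result is deterministic.
    for name, size in sorted(interpolants.items(), key=lambda kv: (-kv[1], kv[0])):
        if not 1 <= size <= 4:
            raise ValueError(f"{name}: an interpolant has 1-4 components, got {size}")
        for reg, space in enumerate(free):
            if space >= size:
                break
        else:
            # No existing register has room: open a new one.
            reg = len(free)
            free.append(4)
        start = 4 - free[reg]
        assignment[name] = (reg, "xyzw"[start:start + size])
        free[reg] -= size
    return assignment, len(free)
```

For example, packing `{"uv": 2, "normal": 3, "fog": 1, "lightmap_uv": 2}` uses two registers instead of four: `fog` lands in the `w` component of the register holding `normal`, and `uv` in the `zw` components of the register holding `lightmap_uv`.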

For our next project I’m going to remove CrossStitch from Despair. I’m going to do it with a scalpel if possible, but I’ll do it with a chainsaw if necessary. I’m nervous about it, because despite my angst and my disillusionment with dynamic shader compilation, Day 1’s artists are almost universally fans of the Despair renderer. They see Façade and the other elements of Despair graphics as a powerful and flexible package that lets them flex their artistic muscles. I can’t take that away from them, but I also can’t bear to write another line of code to work around the costs of on-the-fly shader compilation.

It is clear to me now what I didn’t want to accept five years ago. Everyone who has a say in it sees the evolution of GPU programs paralleling the evolution of CPU programs: code is static and data is dynamic. CrossStitch has had a good run, but fighting the prevailing trends is never a happy enterprise. Frameworks like DirectX Effects and CgFX have become far more full-featured and production-ready than I expected, and I’m reasonably confident I can find a way to map the majority of Despair’s graphics features onto them. Whatever I come up with, it will draw a clear line between the engine and its shaders and ensure that shaders can be compiled wherever and whenever future platforms demand.

Excellent read! And the screenshot of the terrain material is just mind-blowing.

Why didn’t you go with an offline shader compiler and load the resulting shader binaries in the game? That would have avoided the need for the Xbox to do the shader compilation (and the 160 MB). I must be missing something about how Façade worked.

The problem is that Façade materials don’t define whole shader programs in isolation. They define lists of shader fragments that are only part of the full list of fragments used to generate complete programs. The number of shader programs that could be generated from a single Façade material depends on the rest of the engine’s feature set. Based on other content or script, any number of additional shader fragments may be appended (or prepended) to the material’s shader chain before it is transformed into a full program for rendering.

Wonderful read, thanks a lot!

We did not go as far as having flexible shader fragment networks, but the plain old HLSL uber-shader approach gives a comparable amount of headache when it comes to the combinatorial explosion of permutations and their compilation time.

Offline compilation did not really work, as the material description alone was not enough: the final permutations also depended on the platform, graphics settings, and environment.

“Harvesting” the caches by QA did not work either, due to the human factor (it’s hard to rely on anything that demands a manual, tedious job over and over again) and the fact that, in our case, shader code would change quite often even after the game shipped.

Conservative “warming up” during level loading was not a good option either, as it would still be too speculative and generate excessive numbers of permutations, blowing up memory usage and loading times.

We ended up with the asynchronous compilation approach, which worked more or less fine in our case.

A shader cache miss would result in rendering with a “fallback” material while the main material was queued for asynchronous compilation… the idea is simple, but of course sorting out the details was quite a pain.
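A minimal sketch of the fallback approach described in this comment, using Python’s `concurrent.futures.ThreadPoolExecutor` as a stand-in for whatever job system an engine would use. `AsyncShaderCache`, `compile_fn`, and `fallback` are hypothetical names, and `get()` is assumed to be called from a single render thread.

```python
from concurrent.futures import ThreadPoolExecutor


class AsyncShaderCache:
    """Sketch of miss-to-fallback asynchronous compilation.

    get() is meant to be called from a single render thread; compile_fn runs
    on worker threads and stands in for the real shader compiler.
    """

    def __init__(self, compile_fn, fallback, workers=2):
        self._compile = compile_fn
        self._fallback = fallback
        self._ready = {}
        self._pending = {}
        self._pool = ThreadPoolExecutor(max_workers=workers)

    def get(self, key, source):
        if key in self._ready:
            return self._ready[key]
        future = self._pending.get(key)
        if future is None:
            # Cache miss: queue the compile and draw with the fallback for now.
            self._pending[key] = self._pool.submit(self._compile, source)
            return self._fallback
        if not future.done():
            return self._fallback
        # Compile finished: promote the result (re-raises if compile_fn failed).
        del self._pending[key]
        self._ready[key] = future.result()
        return self._ready[key]

    def wait_all(self):
        """Block until every queued compile has finished (e.g. at a load screen)."""
        for future in list(self._pending.values()):
            future.result()
```

The first request for a key returns the fallback and queues a compile; later requests keep returning the fallback until the compile finishes. `wait_all()` gives a load screen a way to block on everything already queued.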

You didn’t like generating the permutations offline at compile time? That’s not encouraging. I’ve been assuming that everyone who went that direction was happy with the results. I can’t bear the thought of converting our entire engine over to offline compilation and then discovering it has as many problems as compiling on the fly!

What’s the difference between runtime and offline compilation? In theory, anything you can do at runtime, you can just as easily do offline. In practice, it sounds like your runtime shader system has too many variables, such that there are simply too many permutations to reasonably generate offline. What if you took CrossStitch 2.0 and cut or modified features until you could generate the entire spectrum of shaders offline? How would this be different from a hypothetical CgFX-based framework?

With CrossStitch 2.0, I’m also curious whether your shipping game was able to do runtime shader generation, or if you just shipped the shader cache. I hear horror stories from some of my coworkers about a previous job where the testers had to play the entire game, attempting to view every piece of geometry from every possible angle in an attempt to fully populate their dynamic shader cache. I’m sure even the shipped product was missing a permutation or two if the player managed to position themselves just right.

You’re certainly right that the heart of the problem is that we have too many variables to compile all permutations offline. One simple example is that Facade materials don’t include any indication as to how they’re going to be used. So while a particular material may only ever be applied to a rigid mesh, in theory the same material could be used as a decal, on a particle, or any number of other ways. One of the steps in eventually replacing CrossStitch has been narrowing the number of variables.

However, there is another factor that separates offline and runtime compilation. Since CrossStitch shaders are pieced together at runtime, the “data” that defines whole shaders is partially platform-dependent C++ code. To write an offline compiler, parts of our renderer would either have to be duplicated in a PC tool (and then kept in sync) or factored out in such a way that the same code could run on PC and (for example) PlayStation 3 without additional cost.
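The combinatorics behind the rigid-mesh, decal, and particle example in this reply can be illustrated with a short sketch. The fragment names and the three engine-side choices (usage, lighting, extras) are hypothetical; the point is only that the chains built around one material multiply with each independent choice the engine can make, so narrowing any one of them shrinks the total.

```python
from itertools import product


def candidate_chains(material, usages, lighting, extras):
    """Yield every fragment chain the engine might build around one material.

    material: tuple of the material's own fragments. usages, lighting, extras:
    hypothetical engine-side choices that prepend or append fragments.
    """
    for usage, lights, extra in product(usages, lighting, extras):
        yield (usage, *material, *lights, *extra)
```

With four usages, three lighting setups, and fog on or off, one two-fragment material yields 24 candidate chains; if the material declared that it is only ever used on rigid meshes, the count would drop to 6.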

This is an awesome post that lets us see the other side of the shader compilation fence without having to actually invest the resources in it. Thanks Adrian.

> adding a new type of node to Façade is as simple as creating a new shader fragment and annotating its public-facing variables.

How did you handle preshaders (pulling out and evaluating uniform expressions on the CPU) then? That’s an important optimization in my experience.

> the plain old HLSL uber-shader approach gives comparable amount of headache when it comes to the combinatorial explosion of permutations and their compilation time.

I think the compile time of permutations is a solvable problem with some work. You can parallelize and even distribute shader compilation, speeding it up by orders of magnitude.

One of the big disadvantages of offline shader compilation is the memory required to store all the permutations that get generated. It helps to compress the shaders in chunks and only decompress them as needed, but it will still be a significant amount of memory (usually 10-15 MB on the 360).
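Chunked compression of a shader archive can be sketched with Python’s standard `zlib` module. `build_chunks` and `ChunkedShaderStore` are hypothetical names; shaders are grouped by sorted key purely for simplicity, whereas a real build would more likely group shaders that are loaded together. The store keeps only the most recently decompressed chunk, trading some repeated decompression for bounded memory.

```python
import zlib


def build_chunks(shaders, chunk_size):
    """Compress shader binaries in groups of `chunk_size`.

    shaders: dict key -> bytes. Returns (chunks, index), where index maps each
    key to (chunk number, offset, length) inside the decompressed chunk.
    """
    chunks, index = [], {}
    keys = sorted(shaders)
    for start in range(0, len(keys), chunk_size):
        blob = bytearray()
        for key in keys[start:start + chunk_size]:
            index[key] = (len(chunks), len(blob), len(shaders[key]))
            blob += shaders[key]
        chunks.append(zlib.compress(bytes(blob)))
    return chunks, index


class ChunkedShaderStore:
    """Decompresses one chunk at a time, keeping only the most recent one."""

    def __init__(self, chunks, index):
        self.chunks = chunks
        self.index = index
        self._cached_id = None
        self._cached_data = b""

    def load(self, key):
        chunk_id, offset, length = self.index[key]
        if chunk_id != self._cached_id:
            self._cached_data = zlib.decompress(self.chunks[chunk_id])
            self._cached_id = chunk_id
        return self._cached_data[offset:offset + length]
```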

I confess to not having spent a lot of time investigating preshaders. I’m careful about arranging the constant inputs for costly shaders like post-processing filters, and I’ve manually compared the value of preshaders in those cases without positive results; but for the vast majority of our shaders that are more fully data-driven, I have no idea how much perf we’re leaving on the table by not taking advantage of preshaders.

I’ve been working on a personal project for the Xbox 360 (through unconventional means), and I’ve noticed a problem. I want to implement deferred shading, but that means I have to use predicated tiling, and that appears to be a problem: the predicated tiling sample supplied with the Xbox Development Kit causes the console to reset (and it has trouble starting up again). Do you have any idea why this happens?

I can’t speculate on what might cause the problem you’re seeing, but I can tell you that I’ve successfully used predicated tiling on the Xbox 360. Some people have also had success manually implementing tiling. A simple implementation will not be competitive with the XDK-supported predicated tiling, but it opens up the possibility of more high-level optimizations and can improve command buffer utilization and reduce latency.

I might be missing something, but wouldn’t it be possible to generate all the needed shader permutations from the scripts provided? Basically doing the same thing offline.

Not in an automated or general way. Knowing what shaders a script might create is equivalent to knowing what output a program might produce for an unknown set of inputs, and that’s a problem that simply isn’t solvable.