In my last article I promised a follow-up description of the memory allocation system that I wrote for Despair Engine during the development of F.E.A.R. 3. Before I get to that, however, I’ve been inspired by Charles Bloom‘s recent posts on the job queue system that is apparently part of RAD Game Tools‘ Oodle, and I thought it might be interesting to describe the current job system and thread layout in Despair.

The “concurrency manager” in Despair Engine consists of worker threads and job queues. By default there is one pool of worker threads that share a single job queue, referred to as public workers, and some number of additional worker threads, each with its own job queue, referred to as private workers.

The job queues and their worker threads are relatively simple constructs. Jobs can be added to a queue from any thread and, when jobs are added, they create futures which can be used to wait on the job’s completion. A job queue doesn’t directly support any concept of dependencies between jobs or affinities to limit which worker threads a job can run on.

Jobs are fetched by worker threads in strictly FIFO order, but with multiple worker threads servicing a single queue, that doesn’t provide many guarantees on the order in which jobs are processed. For example, jobs A and B, enqueued in that order, may be fetched consecutively by worker threads 1 and 2. Because these tasks are asynchronous, worker thread 2 might actually manage to start and finish processing job B before worker thread 1 has done any meaningful work on job A.

What this means in practice is that any job added to the public worker queue must be able to be processed on any public worker thread and concurrently with any other job in the queue. If a job really needs to enforce a dependency with other jobs, it can do so by either waiting on the futures of the jobs it is dependent on or by creating the jobs that are dependent on it at the end of its execution. Combinations of these techniques can be used to create almost arbitrarily complex job behavior, but such job interactions inhibit maximum parallelism so we try to avoid them in our engine.

Public worker threads are mostly used by data-parallel systems that divide their work into some multiple of the number of public workers, enqueue jobs for each worker, and then, at some later point in the frame, wait on all the jobs’ futures. Examples of systems like this are animation, cloth simulation, physics, effects, and rendering. Although all of the users of the public job queue try to provide as much time as possible between adding work to the queue and blocking on its completion, work in the public job queue is considered high-priority and latency intolerant.

Systems that have work that can be processed asynchronously, but doesn’t meet the requirements of the public job queue, create private job queues. The primary reason for using a private job queue is that the work is long but latency tolerant, and we don’t want it delaying the latency intolerant work in the public job queue.

In Fracture, terrain deformation was processed in a private worker thread, consuming as much as 15 ms a frame but at low priority.

Terrain deformation in Fracture

Starting with F.E.A.R. 3, decals have used a private worker thread. The decal thread generates and clips geometry from a queue. Although the queue might hold several hundred milliseconds of work, it will happily take only what time slices it can get from a single thread until it finishes its backlog.

We also use private worker threads for systems that have restrictions on their degree of parallelism or that perform primarily blocking work. Save data serialization, background resource loading, audio processing, network transport, and, on the PC, communication with the primary Direct3D context fall into this category.

I’ve had occasion over the years to use the Despair concurrency manager for a wide variety of tasks with a wide range of requirements, and I have very few complaints. I find the system simple and intuitive, and it is usually immediately obvious, given a new task, whether a private or public job queue is appropriate. I’ve occasionally wished for a richer set of scheduling options within the job queues themselves, but I ultimately believe that complex scheduling requirements are a symptom of bad multithreaded design and that if complex scheduling truly is justified, it is better handled within the jobs themselves.

The one area where scheduling has given me a lot of trouble, however, and where I wish we could offer some improvement, is in the interaction of public and private worker threads. When the platform, code, and content are reasonably stable, it isn’t too difficult to arrange the public and private workers such that they share the available processor resources efficiently.

On the Xbox 360, for example, where there are 6 hardware threads available, we have the main thread with affinity to hardware thread 0, four public worker threads with separate affinity to hardware threads 1, 2, 3, and 5, and most of our private worker threads sharing affinity with hardware thread 4. This arrangement ensures that the main thread and the public workers are never interrupted by the private worker threads, and it means that the private workers get a roughly equal share of an entire hardware thread. We know exactly how fast the hardware is and we can predict with a high degree of accuracy how much work each private worker will receive, so we don’t need to worry about oversubscription or underutilization of hardware thread 4.

In cases where the private worker threads aren’t sharing the load in a way we consider optimal, we can tweak either the hardware thread affinity or the software thread priorities to get the behavior we want. For example, in F.E.A.R. 3 we offloaded some marshaling of data for network transport to a private worker thread. Jobs for that thread were generated near the end of each frame and they had to be completed near the beginning of the following frame. If the private workers were left to the OS scheduler, the decal thread might preempt the network thread during that crucial window and cause a stall in the next frame. Since we knew the network thread never generated more than 5-6 ms of single-threaded work, we could safely boost its thread priority and ensure that it was never preempted by decals.

In another case where we weren’t 100% satisfied with the default scheduling of a private worker, we moved the private worker to share a hardware thread with one of the public workers but also lowered its thread priority. The luxury of a fixed, single-process platform is that we can hand tune the thread layout and be confident that our results will match those of our customers.

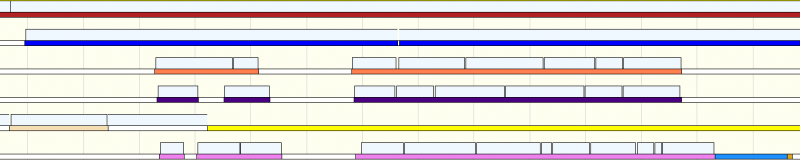

Example hardware thread utilization in F.E.A.R. 3, as captured by PIX on the Xbox 360

In the capture above you can see examples of both the situations I described. The thin colored lines represent software threads and the thick blocks above them represent individual jobs. A high priority audio thread, shown in yellow, interrupts a job on hardware thread 4, but that’s okay because the job being interrupted is latency tolerant. Later in the frame another thread, shown in light blue, schedules nicely with the latency intolerant jobs in the pink public worker on hardware thread 5.

The PC is where things get messy. On the PC we worry most about two different configurations. One is the low end, which for us is a dual core CPU, and the other is the high end, which is a hyper-threaded quad core CPU.

Currently, on the PC we allocate MAX(1, num_logical_processors-2) public worker threads. On a hyper-threaded quad core that means 6 public worker threads and on a dual core that means just 1 public worker thread. Unlike on the Xbox 360, however, we don’t specify explicit processor affinities for our threads, nor do we adjust thread priorities (except for obvious cases like an audio mixer thread). We don’t know what other processes might be running concurrently with our game and, with variations in drivers and platform configurations, we don’t even know what other third-party threads might be running in our process. Constraining the Windows scheduler with affinities and thread priorities will likely lead to poor processor utilization or even thread starvation.

That’s the convention wisdom anyway, but it sure doesn’t look pretty in profiles. From a bird’s eye view the job system appears to work as expected on the PC. As the number of cores increase, the game gets faster. Success! If it weren’t for our internal thread profiler and the Concurrency Visualizer in Visual Studio 2010 we’d probably have been happy with that and moved on.

On high-end PCs things aren’t too bad. Both our job queue visualization and Visual Studio’s thread visualization sometimes show disappointing utilization of our public workers, but that’s not necessarily a problem. We know we’re oversubscribed because we have more software threads created by the engine than there are hardware threads and there are at least 3 other third-party threads in our process doing meaningful work not to mention the other processes in the system. One of the benefits of a thread pool is that the number of participating threads can scale with the environment. Thankfully the behavior we usually see in these cases is fewer public workers tackling more jobs rather than all public workers starting jobs and then some being preempted, which would block the job queue until they can be rescheduled.

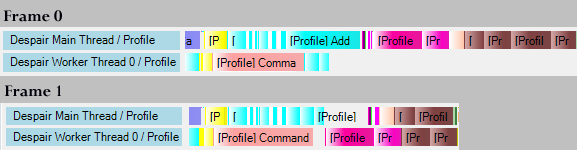

Job visualization from the same portion of two adjacent frames on a dual core PC

The image above is an example from our internal thread profiler. I had to zoom and crop it a bit to fit on the page, but what you’re seeing is the same portion of two frames on a dual core machine. The colored blocks represent jobs. You can see in the first frame a long stretch in which the main thread is processing jobs while the single worker thread is sitting idle. The next frame shows the intended behavior, with both the main thread and the worker thread processing jobs equally. We can’t visualize third-party threads or other processes with our internal profiler, so we have to turn to Visual Studio’s profiler to see what’s preempting our worker in that first frame. In our case it is usually the video driver or audio processor, but really any thread could be at fault. The more active threads in the system, including our own private workers, the more likely this sort of interference becomes.

The other behavior that is a little disappointing on many-core PCs is the high percentage of cross-core context switches. The Windows scheduler prioritizes quite a few factors above keeping a thread on its current core, so it isn’t too big a surprise for threads to jump cores in an oversubscribed system. The cost is some nebulous decrease in CPU cache coherency that is all but impossible to measure. Short of setting explicit processor affinities for our threads, which hurts overall performance, I haven’t had any luck improving this behavior. I had hoped to combat this effect with SetThreadIdealProcessor, but I haven’t actually been able to detect any change in scheduling when calling this function so we don’t use it.

On a high-end PC, as Louis C.K. might say, these are first world problems. With 8 logical processors we can afford to be less than perfect. From my profiles, even the best PC games are barely utilizing 30% of an 8 processor PC, and we’re comfortably within that range so I’m not complaining.

On dual core machines these issues can’t be ignored. With only two hardware threads, we’re now massively oversubscribed. The particularly difficult situation that we have to cope with is when all of our private workers are fully occupied at the same time. As I explained earlier, the decal thread is latency tolerant, but it can buffer far more than a single frame’s worth of work. This means that, left unchallenged, it alone can consume a full core for a full frame. Video drivers usually have their own threads which might, under heavy load, consume 25% of a core, audio might want another 20%, and Steam another 20%. All told we can have two thirds of a core’s worth of work in miscellaneous secondary threads and another full cores’s worth of work in the decal thread. That’s 1.7 cores worth of work competing on a level playing field with the main job queue on a machine with only 2 cores!

For most of these threads we have a general optimization problem more than a concurrency problem. We don’t have much flexibility in when or how they run, we just need to lower their cost. The decal thread, on the other hand, is different. Its purpose is to periodically consume far more work than would normally be budgeted for a single frame and to amortize the cost of that work over multiple frames. If it is impacting the execution of other threads then it isn’t doing its job.

My first reaction to this problem was, as usual, to wish for a more sophisticated scheduler in the public job queue. It seemed as though an easy solution would be to stick decal jobs in the public job queue and to instruct the scheduler to budget some fraction of every second to decal processing while trying to schedule decal jobs only at times when no other jobs are pending. After some consideration, however, I realized that this was asking too much of the scheduler and, perversely, would still require a lot of work in the decal system itself. Since the job scheduler isn’t preemptive, even a powerful system of budgets and priorities would rely on the jobs themselves being of sufficiently small granularity. The decal system would have to break up large jobs to assist the scheduler or, similarly, implement a cooperative yielding strategy that returned control to the scheduler mid-execution.

In addition to assisting the scheduler, the decal system would also have to become aware of how its rate of production was being throttled. Since decal resources are recycled using a heuristic that is heavily LRU, the decal system must manage the rate of input requests to match the rate of production in order to ensure that decals aren’t being recycled as soon as they are created.

It seems that any additional complexity added to the job scheduler is going to require equal complexity to be added to the decal system in order to take advantage of it. That’s always a red flag for me in systems design.

I’m still weighing some options for dealing with the decal thread, but my current favorite is to swallow my fears and seek help from the OS thread scheduler. If we reduce the thread priority of the decal thread under Windows, it will only be given time when the cores would otherwise be idle. However, since Windows implements thread boosts, even in a completely saturated environment the decal thread won’t starve completely. Nevertheless, this is a risky strategy because it creates the ability for the decal thread to block other threads through priority inversion. This probably isn’t a scalable long-term solution, but given our current thread layout and hardware targets, it achieves the desired result.

The difficulty of achieving maximum throughput on multicore processors is something that is often talked about in games, but what is less often talked about is how much harder this is on the PC than on the consoles. Maximizing throughout on high-end PCs is great, but, as I’ve shown, it must be done without sacrificing response time on low-end PCs. With our current approach I’ve been pretty pleased with our progress in this area, but I’m nevertheless having a hard time envisioning a day when we can fully utilize the resources of an 8 processor machine and still continue to provide a compatible play experience on a lowly dual core.

Hopefully by that time we’ll have broad support for GPGPU tasks as well as the scheduling flexibility to rely on them for more of our latency tolerant work.

Apparently (I haven’t tried it myself) you can disable thread boosts in Windows, which should prevent the priority inversion problem if you lower the priority of the decal thread.

http://msdn.microsoft.com/en-us/library/windows/desktop/ms686280(v=vs.85).aspx

The priority inversion problem occurs when the decal thread acquires a lock that the main thread needs. If the decal thread is low enough priority that it never gets scheduled, it will never release the lock and the main thread will deadlock. Priority boost will actually prevent the deadlock, but disabling it, as you suggest, might be a good thing. I’d rather have a clear deadlock that I can detect and fix than an intermittent hitch as the decal thread waits for a priority boost.

Adrian, thanks for the post! I tend to agree that a job queue does not need to have a lot of built in complexity for handling dependencies. Charles Bloom’s solution for “permits” is basically the same as having one job queue a follow-up job. I do, however, have a few questions. I would appreciate your thoughts.

1. As you said, the public queue is for timing-intolerant, ready-to-run tasks. Have you considered any debug mechanisms to help monitor/enforce this behavior? For example, it seems like you could detect the following cases:

1a. A job that was queued but never waited on.

1b. A job that was queued by a private worker thread.

1c. A single job that took “too long” (according to some metric).

1d. A job that remained in the queue for “too long” without anybody waiting on it (for example, something that wasn’t really needed until next frame).

1e. A job that was “too fast” (according to some metric), such that the job queue overhead was significant, with respect to the duration of the job.

2. I would also be interested in your thoughts regarding what the calling thread should do while waiting for a job. Consider the following cases:

2a. The calling thread waits on a job that has not yet started. If the calling thread is the main thread, then it would presumably be better for it to steal the job and start executing it immediately. Thoughts?

2b. The calling thread needs to wait on multiple jobs, some of which have not yet started. It could steal one of those jobs and promote the others to the head of the queue. Thoughts?

2c. The calling thread waits on a job that has already started, and we don’t know how long it will take to complete. The calling thread could yield, but then it might lose some time in waking up (maybe OK for a worker, but probably not OK for the main thread). Or the calling thread could spin. Or, theoretically, it could do some work if there was a way to interrupt that work responsively, when the real job finishes. Thoughts?

3. One commonly used feature of the Source engine’s thread library is a template helper called CParallelProcessor. It takes an array of elements, a size, an execute function, and a thread pool. Internally, it creates some number of jobs, each of which helps to process the elements. The calling thread also participates until there are no more elements to process, and all the jobs have finished (or never started). You could obviously do the same thing by managing separate jobs, but only if the calling thread can still contribute. Either way, you have to deal with the fact that other jobs might already be in the queue. In the worst case, the calling thread will be the only one processing the elements. If the calling thread is the main thread, then that’s a pretty serious problem. One solution would be to add the new jobs to the head of the queue (LIFO) rather than the tail (FIFO). One could even argue that it should preempt other jobs that are already in progress, if there was a reasonable way to do so. Thoughts?

Thanks again! Talk to you soon!

Hi, Nate! Always great to hear from you! To answer your questions:

1) We have a debugging tool that is similar to PIX timing captures on the Xbox and (from what I’ve heard of it) Rad’s Telemetry tool. That’s by far my favorite way to visualize and debug concurrency issues at the job level. We have profile timing events that are also helpful, but nothing beats having a graph with markers indicating when each job was added, when and for how long it ran, and when any thread waited on it.

What we don’t have, and what I think you’re suggesting, is any constant and automated tracking of potential performance problems in the job queue. That’s a good idea and something the we could probably get some benefit from.

2) We currently have two mechanism for a thread to wait on a job future. A thread can wait passively, which means it yields execution entirely until the job is complete, or it can wait actively, which means it participates in executing jobs in the queue until the job it is waiting on completes. The choice to wait actively or passively is only ever made at compile-time right now, based on profiling and specific knowledge of the job structure at the time we need to wait.

The system could certainly be a lot smarter, particularly if it had better dependency information between jobs (allowing it to process jobs out of order) or estimates on the expected duration of each job (allowing it to avoid starting long jobs when the job being waited on is going to finish soon). The truth is, though, that our job behavior isn’t complicated enough to justify adding features. For the most part our job queue users fall into three categories. The first category starts with an empty queue, divides its work into one more jobs than we have public workers, submits a job for each public worker, processes the last job itself, and finally waits passively for all the jobs to complete (leaving an empty queue). The second category submits a bunch of jobs to the public queue and then later in the frame, when it can be virtually certain the jobs it added have completed, it waits passively on the jobs and processes their results. And the last category produces a bunch of jobs of variable count and duration and may have to wait on a subset of the jobs before it can move on or produce more jobs. This last category is the only category which can benefit from more sophisticated wait behavior and it usually performs better with active waiting.

As we move towards greater thread utilization and more interleaving of jobs from different systems, we’ll have to worry more about the interactions between jobs in the queue.

3) We have some helper functions that sound similar to CParallelProcessor. As I described above, although the main thread can participate generically in the job queue, many systems prefer to simply reserve a portion of their distributed work for the main thread and process it directly.

A lot of your comments and questions highlight the problem of picking the best job to work on at each moment, given that not all jobs have equal urgency. It is definitely a hard problem, and one that we struggle with, but for the most part we deal with it by separating the latency tolerant and intolerant jobs into separate queues. If you can accept that every job in the public job queue needs to be finished ASAP, then it doesn’t really matter which one you work on first or whether the main thread is processing jobs from the queue or doing something else.

Adrian, thanks for the response! Part of the difficulty with the Despair Engine’s public job queue is that it is not really reserved for jobs of equal urgency. Consider the following frame order:

1. Animation code adds several jobs

2. Physics code adds several jobs

3. Physics code immediately waits on its jobs

4. Other stuff happens

5. Animation code waits on its jobs.

In this example, I would say that the physics jobs have greater urgency. The problem is that step 3 may need to wait on animation jobs that have not even started yet. My suggestion was to execute such jobs ahead of the not-yet-started animation jobs, in the hope that the animation jobs will still get done before step 5 (such as during step 4).

Obviously, such cases may not arise in practice, and profile data can tell us. As we look to the future, however, we assume that we will need to stress our concurrency systems more and more, and these issues may become more important. Those are my musings for today, at any rate. Thanks again!

Nate

Your point is well taken, but the specific problem you’re describing doesn’t actually exist in our engine. Physics jobs can’t preempt the pre-physics animation jobs because the physical simulation is dependent on the results of those jobs. I can’t think of any cases in our engine right now where two systems are interleaved without an implied dependency.

That said, robust support for varying job priorities is definitely a weakness of our concurrency manager. Having separate software threads barely works for two levels of job priority, but it doesn’t scale to more priority levels. To handle more variation in job priorities, I think we’d have to add direct support for that into the public job queue.