dlmalloc

Dlmalloc is a general-purpose memory allocator developed by Doug Lea since 1987. It is highly optimized, easily portable, and elegantly designed. It functions as a drop-in replacement for malloc and the source has been generously released into the public domain. The code is also an absolute joy to read, with every line conveying volumes of careful intention—optimizations, bug fixes, and refinements thoughtfully integrated over the years. With so many positive attributes to recommend it, it is no wonder dlmalloc has become the gold standard for memory allocators and a popular choice for game developers. It is also really unfortunate, because dlmalloc is a terrible allocator for today’s game consoles.

To understand why this is, you must first have an overview of dlmalloc’s interface and how it works. Dlmalloc manages a pool of address space composed of one or more discrete regions called segments. Dlmalloc’s pool can optionally grow or shrink through user-provided callbacks to allocate new segments and free unused ones. The “grow” callback is triggered when dlmalloc can’t satisfy an allocation request within its currently managed pool of memory and the “shrink” callback is triggered when dlmalloc detects that a segment is no longer occupied by any allocations. The grow callback is typically implemented with OS-level functions that allocate memory such as VirtualAlloc, mmap, or sbrk.
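To make the callback contract concrete, here is a minimal sketch of a pool that grows and shrinks through user-supplied callbacks. It is a toy model, not dlmalloc's code: `ToyPool`, `Segment`, `SEGMENT_SIZE`, and the callback signatures are all hypothetical names invented for this example, and allocation inside a segment is a simple bump pointer.

```python
SEGMENT_SIZE = 1 << 20  # assumed segment granularity (1 MiB), chosen for the example


class Segment:
    def __init__(self, capacity):
        self.capacity = capacity  # bytes handed over by the grow callback
        self.used = 0             # bytes carved out so far (bump pointer)
        self.live = 0             # number of outstanding allocations


class ToyPool:
    """Hypothetical pool that only models when the callbacks fire."""

    def __init__(self, grow, shrink):
        self.grow = grow
        self.shrink = shrink
        self.segments = []

    def alloc(self, size):
        # First try to satisfy the request from the segments already managed.
        for segment in self.segments:
            if segment.capacity - segment.used >= size:
                break
        else:
            # Nothing fits, so the pool has to grow.
            segment = Segment(self.grow(size))
            self.segments.append(segment)
        segment.used += size
        segment.live += 1
        return segment  # the handle is simply the owning segment

    def free(self, segment):
        segment.live -= 1
        if segment.live == 0:
            # The segment no longer holds any allocations: hand it back.
            self.segments.remove(segment)
            self.shrink(segment.capacity)


events = []


def grow(min_size):
    # Stand-in for an OS call such as VirtualAlloc, mmap, or sbrk.
    size = -(-min_size // SEGMENT_SIZE) * SEGMENT_SIZE  # round up to a whole segment
    events.append(("grow", size))
    return size


def shrink(size):
    events.append(("shrink", size))


pool = ToyPool(grow, shrink)
a = pool.alloc(600 * 1024)  # pool is empty, so the grow callback fires
b = pool.alloc(600 * 1024)  # does not fit in the first segment: grow again
pool.free(b)                # second segment is now empty: shrink fires
pool.free(a)                # first segment is now empty: shrink fires
print(events)
```

The sketch releases a segment as soon as it is empty, which is the simplest possible policy and is only there to show when each callback would fire.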

Dlmalloc is a boundary tag allocator at its heart, so segments are treated like new nodes in an intrusive linked list and are written with small headers or footers. The important thing to note about this is that dlmalloc requires that all memory it manages be readable and writable. The grow callback can return virtual memory, but it must already be committed (mapped to physical memory) in order for dlmalloc to function.

Another important characteristic of dlmalloc is that while it can return segments through the use of the shrink callback, it is not very aggressive in doing so. Segments are stored in a linked list and the only way for dlmalloc to coalesce adjacent segments or find empty segments is to perform a linear traversal of that list. For some users segments may be very large in size and very small in number so the linear traversal is cheap, but for most users segment management isn’t worth the cost, and dlmalloc’s default configuration is to only consider the most recently allocated segment when looking for segments to merge or release.

This is significant because it means dlmalloc’s callbacks can’t be used to allocate and commit individual pages of virtual memory. Trying to use dlmalloc with a segment equivalent to a virtual memory page would be prohibitively expensive or pointless depending on the configuration.

So to summarize, dlmalloc has no support for virtual memory.

This may seem like a crippling limitation for such a popular allocator, but in practice it affects very few systems. In fact, if a system meets any of the following requirements, dlmalloc’s blind spot when it comes to virtual memory is almost completely irrelevant.

Demand Paging

Almost all general-purpose computers these days have an operating system that features demand paging. With demand paging, the operating system keeps track of which pages of virtual memory are needed by the system and tries to keep only those pages resident in memory. In other words, dlmalloc doesn’t have to bother with the difficult work of committing and decommitting pages because the OS is already doing a pretty good job of it.

More Physical Memory than Virtual Address Space

In the consumer space, most applications are 32-bit and run on machines with more than 2 gigabytes of memory. This includes the most recent big-budget PC games like Crysis, Battlefield, Call of Duty, and Batman since they all have minimum system requirements of at least 2 gigabytes of main memory. If dlmalloc can commit all of the available address space without penalty, it has no reason not to do it.

No Virtual Memory or Predictable Allocation Pattern

Embedded systems are likely to have no virtual memory or to have very predictable allocation patterns that don’t benefit from virtual memory. The fact that dlmalloc sacrifices virtual memory support and dynamic segment management in favor of speed and simplicity is actually a perk for embedded systems.

Which Brings Us to Modern Consoles

Modern game consoles are unusual in that they don’t match any of the above criteria. Both the Xbox 360 and the Playstation 3 have rich support for virtual memory, but the operating systems on the two consoles offer limited or no support for demand paging. The Xbox 360 has 512 megabytes of physical memory to back its virtual address space and the Playstation 3 has 256 megabytes. Both consoles provide games with significantly more virtual address space than physical memory, so there is a huge potential upside to managing them separately.

Why We Care About Virtual Memory

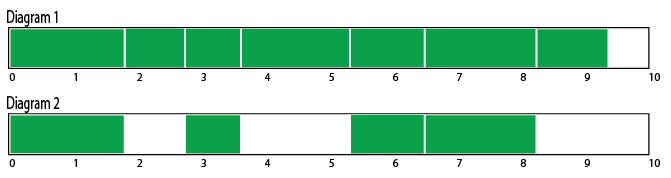

In the absence of demand paging, virtual memory exists for one purpose only: to reduce the cost of fragmentation. Fragmentation occurs in any allocator that manages variable-sized blocks of memory which can be freed in arbitrary order. Consider the accompanying diagrams. The green blocks are allocations in a system with 10 megabytes of memory. In the first diagram, most of the available memory has been allocated in variable-sized amounts. In the second diagram, some of those allocations have been freed. There are more than 4 megabytes of memory available in diagram 2, but even a 2 megabyte allocation will fail because there is no contiguous block of free memory large enough to hold it. This phenomenon is known as fragmentation.
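The same situation can be reproduced with a few lines of simulation. The sketch below models a 10 megabyte heap with a first-fit search; the block sizes and the choice of which blocks to free are invented for illustration and do not reproduce the diagrams exactly.

```python
HEAP_KB = 10 * 1024  # 10 MB heap, measured in kilobytes


def free_regions(heap_kb, live):
    """Return the (start, size) gaps between live blocks, in address order."""
    gaps, cursor = [], 0
    for start, size in sorted(live):
        if start > cursor:
            gaps.append((cursor, start - cursor))
        cursor = start + size
    if cursor < heap_kb:
        gaps.append((cursor, heap_kb - cursor))
    return gaps


def first_fit(heap_kb, live, size):
    """Return the start of the first gap that can hold `size`, or None."""
    for start, gap in free_regions(heap_kb, live):
        if gap >= size:
            return start
    return None


# Fill most of the heap with variable-sized blocks (hypothetical sizes, in KB).
sizes_kb = [1536, 1024, 512, 1536, 1024, 1536, 512, 1536, 512]
live = []
for size in sizes_kb:
    live.append((first_fit(HEAP_KB, live, size), size))

# Free three blocks that are not neighbours of one another.
live = [block for index, block in enumerate(live) if index not in (0, 3, 5)]

gaps = free_regions(HEAP_KB, live)
total_free_kb = sum(size for _, size in gaps)
largest_gap_kb = max(size for _, size in gaps)
two_mb_request = first_fit(HEAP_KB, live, 2048)

print("free:", total_free_kb, "KB; largest gap:", largest_gap_kb, "KB")
print("2 MB request placed at:", two_mb_request)
```

In this layout half of the heap is free, yet no single gap reaches 2 megabytes, so the request has nowhere to go.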

Fragmentation can be a huge problem with any general-purpose allocator. The less predictable an application’s allocation patterns and the larger the range of its allocation sizes, the more susceptible it is to allocation failure due to fragmentation. Most console games are large, complex systems integrating code from multiple sources and trying to satisfy a wide range of allocation patterns. At the same time, the game industry is very competitive, so console games are expected to take maximum advantage of the console hardware’s capabilities. You simply can’t do that without tackling the problem of memory fragmentation.

The most surefire way to avoid memory fragmentation is to only manage memory in fixed-size blocks. If every allocation is the same size, any hole left by one allocation can be filled perfectly by another. Unfortunately requiring that all memory requests be the same size is unreasonable, and trying to do so naively will only result in even more memory waste. This is where virtual memory comes in.

Virtual memory subdivides all physical memory into fixed-size blocks, known as pages, and provides a mechanism for contiguous allocations of virtual memory to span multiple nonadjacent pages. By adding this level of indirection, an application can be given an address that behaves like a block of contiguous memory, but under the hood is made up of separate fixed-size allocations of physical memory. This completely removes the problem of physical memory fragmentation, though it obviously creates the problem of virtual memory fragmentation. If virtual memory is limited to the same size as physical memory, you’re no better off than when you started. However, if virtual memory is allowed to be much larger than physical memory, even though fragmentation still exists, it is much less likely to ever cause an allocation failure.

The reason why virtual memory can be larger than physical memory is that unused pages of virtual memory don’t have to be backed by physical memory. As the name implies, it is a virtual resource.

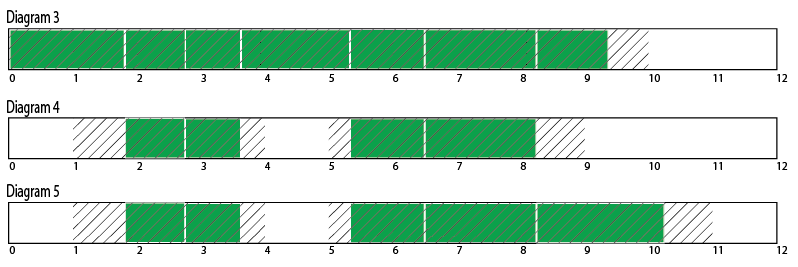

The next set of diagrams shows the exact same sequence of memory allocations as the earlier diagrams, but this time using a slightly larger pool of virtual memory. Allocations in virtual memory are still marked in green and free regions are still marked in white, but now the diagram is divided into 1 megabyte pages and any pages which are backed by physical memory are shaded with diagonal lines. In order for an allocation to succeed, it must fit into a free region of virtual memory that is backed by physical pages, i.e., white areas shaded with diagonal lines.

In diagram 3 all 10 megabytes of physical memory are allocated, but there are still more than two megabytes of virtual memory available. A new 2 megabyte allocation could fit in virtual memory, but it will still fail because it can’t be backed by physical memory. In diagram 4 some allocations have been freed, and not only is there still 2 megabytes of contiguous virtual memory available, there are two free 1 megabyte physical memory pages available to back it. Diagram 5 shows the successful addition of a new 2 megabyte allocation, the same allocation that would have failed without virtual memory in diagram 2. If you count the number of physical pages that are allocated in diagram 5, you’ll see that we haven’t exceeded the system limit of 10 megabytes.

Notice that while we’ve succeeded in allocating an additional 2 megabytes despite fragmentation, we still have more than 2 megabytes of virtual memory left and yet we can’t service another 2 megabyte request. Virtual memory itself is now too fragmented. We could increase the amount of virtual memory without penalty to make room for another 2 megabyte allocation, but it doesn’t help because although we have 2 megabytes of physical memory that isn’t being used, we don’t have 2 free physical memory pages to back the new allocation.
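The sequence in diagrams 3 through 5 can be approximated with a small model that tracks virtual address ranges and counts the 1 megabyte pages they touch. This is only a sketch under stated assumptions: the 16 megabyte virtual range, the block sizes, and the helper names (`pages_of`, `committed`, `gaps`, `allocate`) are all made up for the example, and a page counts as committed whenever any live block overlaps it.

```python
PAGE_KB = 1024          # 1 MB pages, as in the diagrams
PHYSICAL_PAGES = 10     # 10 MB of physical memory
VIRTUAL_KB = 16 * 1024  # assumed size of the virtual address range


def pages_of(start, size):
    """Indices of the pages touched by the block [start, start + size)."""
    return set(range(start // PAGE_KB, (start + size - 1) // PAGE_KB + 1))


def committed(live):
    """Pages that must be backed by physical memory for the live blocks."""
    pages = set()
    for start, size in live:
        pages |= pages_of(start, size)
    return pages


def gaps(live):
    """Free (start, size) regions of virtual address space, in address order."""
    found, cursor = [], 0
    for start, size in sorted(live):
        if start > cursor:
            found.append((cursor, start - cursor))
        cursor = start + size
    if cursor < VIRTUAL_KB:
        found.append((cursor, VIRTUAL_KB - cursor))
    return found


def allocate(live, size):
    """Place a block in the first gap that fits and can be backed, else None."""
    backed = committed(live)
    for start, gap in gaps(live):
        if gap < size:
            continue
        extra = pages_of(start, size) - backed  # pages that would need committing
        if len(backed) + len(extra) <= PHYSICAL_PAGES:
            live.append((start, size))
            return start
    return None


sizes_kb = (1536, 1024, 512, 1536, 1024, 1536, 512, 1536, 512)  # hypothetical
live = []
starts = [allocate(live, size) for size in sizes_kb]
before = allocate(live, 2048)  # virtual space is available, physical pages are not

# Free three non-adjacent blocks; pages no block touches are implicitly released.
for index in (0, 3, 5):
    live.remove((starts[index], sizes_kb[index]))
pages_after_free = len(committed(live))

after = allocate(live, 2048)  # fits in virtual space and can now be backed
again = allocate(live, 2048)  # virtual space remains, but only one page is free
print(before, pages_after_free, after, again)
```

Freeing 4.5 megabytes in this layout releases only three whole pages, which is why the first 2 megabyte request succeeds and the second does not, even though the virtual range still has room.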

For virtual memory to be an effective solution to fragmentation, you need a lot more virtual address space than physical memory and you need as small a page size as possible. On the Xbox 360 the default page size is 64 kilobytes and on the Playstation 3 it is 1 megabyte, but both consoles support a range of page sizes. The best page size on consoles for compromising between performance and fragmentation is 64 KB, which raises the question, “why does the Playstation 3 default to 1 megabyte?” The answer is simple: the Playstation 3 uses dlmalloc for its general-purpose allocator and since dlmalloc doesn’t take advantage of virtual memory, there is no point in using a smaller page size!
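A rough way to see why page size matters is to count how much physical memory a given set of live allocations pins down at each page size. The layout below is hypothetical, and every block happens to be aligned to 64 KB, which flatters the smaller page size; treat it as an illustration of the trend rather than a measurement.

```python
def committed_kb(live, page_kb):
    """Physical memory needed to back every page touched by a live block."""
    pages = set()
    for start, size in live:
        first = start // page_kb
        last = (start + size - 1) // page_kb
        pages.update(range(first, last + 1))
    return len(pages) * page_kb


# Hypothetical fragmented layout: (start, size) pairs in kilobytes.
live = [(1536, 1024), (2560, 512), (4608, 1024),
        (7168, 512), (7680, 1536), (9216, 512)]
in_use_kb = sum(size for _, size in live)

for page_kb in (64, 1024):
    backing = committed_kb(live, page_kb)
    print(f"{page_kb:>4} KB pages: {backing} KB committed, "
          f"{backing - in_use_kb} KB stranded in partly used pages")
```

With the larger pages, memory that is free but shares a page with a live block stays committed and cannot be used to back an allocation elsewhere in the address space.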

Hopefully by now I’ve convinced you of the value of virtual memory and why dlmalloc isn’t the right choice for console games. In the future I’ll describe the general-purpose memory allocator I wrote for Despair Engine and how I used it to combat fragmentation. As a bonus, I’ll explain the larger memory management framework that I built on top of the allocator to reduce thread contention and to ensure that the entirety of memory is available to a heterogeneous set of processors, despite their non-unified views of virtual memory.

Errr.. can’t you just use something like dlmalloc for small allocations, and VirtualAlloc for large allocations?

(assuming for the moment that the percentage of memory needed for small vs. large allocs is constant)

Also – there are other reasons for using large virtual memory pages at the OS level.

You can, but the partitioning needs to be static because dlmalloc isn’t designed to grow and shrink its pools dynamically. I would argue that once you’ve accepted that limitation, you’re no longer dealing with a general-purpose allocator and furthermore, the “slack” in your small allocation pool and the rounding-to-page-size in your large allocations is internal fragmentation which is at least as costly as the external fragmentation I describe in this article.

Besides address translation performance and consistency between the CPU and GPU, are there other reasons to use larger page sizes?

if you have control over virtual memory you can also create circular buffers by duplicating the pages of the buffer after the buffer. could be useful in some streaming situations …

If I’m understanding you correctly, you’re proposing a many to one mapping between virtual and physical addresses. You could certainly do some interesting things with that, but I don’t think any of our target platforms have a virtual memory API that is quite that flexible.

If you’re on Linux couldn’t you create a file on a tmpfs volume like /dev/shm and then mmap() the same file (or portions of it) into different parts of the address space? This solution has been suggested to me by Linux kernel people as a way of solving a different problem (namely how to map the same memory twice, once r/w and once r/x).

Yes, on platforms that support it, redundantly mapping a single region of physical memory can be very useful. One popular use is to create circular arrays that wrap automatically in a larger contiguous address range.

“The best page size on consoles for compromising between performance and fragmentation is 64 KB”

That depends a lot on the game and allocation patterns. If you can get away with 1MB pages you avoid some hefty TLB miss penalties. That can be important, especially when you’re pushing for high performance, e.g. 60Hz games.

I agree that where you set the dial between processor and memory efficiency is subjective and title-dependent. I won’t claim to have analyzed a wide range of applications, but in our games the fragmentation benefit of 64 KB vs 1 MB pages is dramatic and the performance difference is so small as to be indistinguishable from noise. Because address translation is cached at multiple levels, my understanding is that the real performance cliff lies between 64 KB and 4 KB pages.

How about on the Xbox One and PS4? I can’t easily find the page sizes they use.