I recently wrote about dlmalloc and how it is a poor choice for a memory allocator for console games. As I explained in my previous article, dlmalloc has two major limitations. It manages a pool of address space composed of discrete regions called segments. It can easily add segments to grow its pool of address space, but it can’t easily remove segments to return address space to the OS. Additionally, it doesn’t distinguish between physical and virtual memory, which means that it can’t take advantage of virtual memory’s ability to combat fragmentation.

During the development of FEAR 3, to address these limitations, I implemented a new general-purpose allocator based on dlmalloc called dsmalloc. Dsmalloc makes two important improvements to dlmalloc.

The first improvement is in how dsmalloc treats segments. In dsmalloc, segments are always managed as completely distinct regions of memory. Dsmalloc will never coalesce adjacent segments. Because of this, dsmalloc tracks segments much more closely than dlmalloc. By overlaying a second intrusive data structure in the free bits of the boundary tag structure used to track individual allocations, dsmalloc can, in constant time and with no memory overhead, determine with every free operation when a segment is no longer occupied and can be returned to the system.
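
To make the observable behaviour concrete, here is a deliberately simplified sketch. It is not dsmalloc's mechanism: dsmalloc hides its bookkeeping in spare bits of the boundary tags and costs no extra memory, whereas this sketch assumes an explicit per-segment counter and assumes segments are aligned to their own size so a pointer can be mapped to its segment with a mask. The names `Segment`, `segment_alloc`, `segment_of`, and `segment_free` are hypothetical, and the bump allocation is only there to hand out addresses.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define SEGMENT_SIZE ((size_t)64 * 1024) /* assumed size and alignment of every segment */

/* Hypothetical header stored at the start of each segment. */
typedef struct Segment {
    size_t live_chunks; /* allocations currently outstanding in this segment */
    size_t bump;        /* offset of the next never-used byte */
} Segment;

static Segment* segment_create(void) {
    /* aligned_alloc stands in for the system's segment reservation call. */
    Segment* seg = aligned_alloc(SEGMENT_SIZE, SEGMENT_SIZE);
    if (seg != NULL) {
        seg->live_chunks = 0;
        seg->bump = (sizeof(Segment) + 15) & ~(size_t)15;
    }
    return seg;
}

static void* segment_alloc(Segment* seg, size_t size) {
    size = (size + 15) & ~(size_t)15; /* keep chunks 16-byte aligned */
    if (size > SEGMENT_SIZE - seg->bump) {
        return NULL; /* segment exhausted */
    }
    void* chunk = (char*)seg + seg->bump;
    seg->bump += size;
    seg->live_chunks++;
    return chunk;
}

/* Constant-time lookup: mask off the low bits to find the owning segment. */
static Segment* segment_of(void* chunk) {
    return (Segment*)((uintptr_t)chunk & ~(uintptr_t)(SEGMENT_SIZE - 1));
}

/* Returns true when this free emptied the segment and it was handed back. */
static bool segment_free(void* chunk) {
    Segment* seg = segment_of(chunk);
    if (--seg->live_chunks != 0) {
        return false;
    }
    free(seg); /* stands in for releasing the segment to the system */
    return true;
}
```

The point of the sketch is only the last function: every free can decide in constant time whether its segment has become empty, without walking any list of segments.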

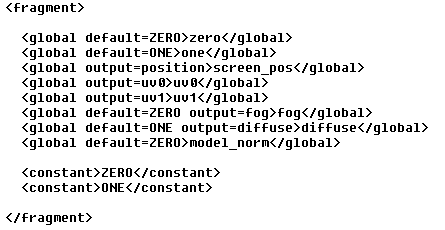

The second improvement is that dsmalloc recognizes the difference between reserved address space and allocated memory. Unlike dlmalloc, which uses two callbacks to allocate and free system memory, dsmalloc exposes four callbacks: two to reserve and release segment-sized regions of virtual address space, and two to commit and decommit page-sized subregions of segments.

Just as dsmalloc never leaves any unoccupied segments reserved, it also never leaves any unoccupied pages committed.

    typedef void* (*ReserveSegmentFunc)(size_t size);
    typedef void (*ReleaseSegmentFunc)(void* ptr, size_t size);
    typedef int (*CommitPageFunc)(void* ptr, size_t size);
    typedef void (*DecommitPageFunc)(void* ptr, size_t size);

    MemorySpace* CreateMemorySpace(
        size_t initialCapacity,
        ReserveSegmentFunc reserveSegmentFunc,
        ReleaseSegmentFunc releaseSegmentFunc,
        CommitPageFunc commitPageFunc,
        DecommitPageFunc decommitPageFunc,
        size_t pageSize,
        size_t segmentGranularity,
        size_t segmentThreshold);
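
As an illustration of what the four callbacks might look like on a POSIX system, here is a minimal sketch built on `mmap`, `mprotect`, `madvise`, and `munmap`. The function names are hypothetical, the choice of POSIX calls is mine rather than anything the engine is known to use, and the sketch assumes that `CommitPageFunc` returns nonzero on success, which the declarations above do not state.

```c
#define _DEFAULT_SOURCE /* exposes MAP_ANONYMOUS and madvise on glibc */
#include <stddef.h>
#include <sys/mman.h>

/* Reserve address space only: PROT_NONE pages cannot be touched yet. */
static void* reserve_segment(size_t size) {
    void* ptr = mmap(NULL, size, PROT_NONE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return ptr == MAP_FAILED ? NULL : ptr;
}

/* Give the whole reservation back to the OS. */
static void release_segment(void* ptr, size_t size) {
    munmap(ptr, size);
}

/* Make a page-aligned subrange usable. Assumed convention: nonzero = success. */
static int commit_page(void* ptr, size_t size) {
    return mprotect(ptr, size, PROT_READ | PROT_WRITE) == 0;
}

/* Drop the backing memory but keep the address range reserved. */
static void decommit_page(void* ptr, size_t size) {
    madvise(ptr, size, MADV_DONTNEED);
    mprotect(ptr, size, PROT_NONE);
}
```

These four functions have the same shapes as `ReserveSegmentFunc`, `ReleaseSegmentFunc`, `CommitPageFunc`, and `DecommitPageFunc`, so they could be passed to `CreateMemorySpace` along with a page size that is a multiple of the system page size.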

These two extensions are implemented without any additional memory overhead compared to dlmalloc and with very little computational overhead. The added cost is a few percent in targeted tests, but in a real-world scenario the performance difference between dlmalloc and dsmalloc isn’t even measurable.
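
The rule that no unoccupied page stays committed comes down to a small piece of arithmetic: when a range of bytes becomes free, only the pages lying entirely inside it can be decommitted. The sketch below shows that calculation under the assumption that the page size is a power of two. The `PageRange` type and `whole_pages_within` function are hypothetical, and a real allocator would also have to keep any page holding its own free-chunk bookkeeping committed.

```c
#include <stddef.h>
#include <stdint.h>

/* Half-open range [begin, end) of page-aligned addresses. */
typedef struct PageRange {
    uintptr_t begin;
    uintptr_t end;
} PageRange;

/* Returns the pages fully contained in the free byte range [begin, end).
   page_size must be a power of two. An empty result has begin == end. */
static PageRange whole_pages_within(uintptr_t begin, uintptr_t end, size_t page_size) {
    uintptr_t mask = (uintptr_t)page_size - 1;
    PageRange range;
    range.begin = (begin + mask) & ~mask; /* round the start up to a page boundary */
    range.end = end & ~mask;              /* round the end down to a page boundary */
    if (range.end < range.begin) {
        range.end = range.begin;          /* the free range spans no whole page */
    }
    return range;
}
```

With 64 kilobyte pages, a free range from 0x10010 to 0x3FFF0 contains exactly one whole page, 0x20000 to 0x30000, while a free range from 0x10010 to 0x1FFF0 contains none.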

The great thing about these two changes to dlmalloc is that they enable a wide range of allocation strategies that otherwise wouldn’t be feasible.

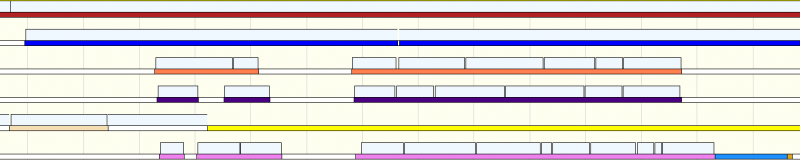

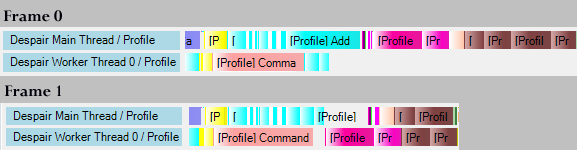

Individual systems within the Despair Engine are free to create separate dsmalloc instances (or regions) for their own use. Because dsmalloc instances are so aggressive about returning memory to the system, they incur minimal internal fragmentation costs and can coexist gracefully with other system allocators. Thread-safety is left to the users of dsmalloc, so individual systems can bypass thread synchronization costs entirely or at least use local locks to avoid contention with other allocators. Using separate dsmalloc instances also provides systems with easier tracking of memory allocations, tighter enforcement of budgets, and, if their allocation patterns exhibit temporal or spatial locality within their own systems, reduced external fragmentation.

For systems that don’t want to bother with using a custom allocator (which, frankly, most don’t), Despair Engine provides a common allocator which services requests from the traditional global allocation functions like operator new, malloc, and XMemAlloc. This common allocator also utilizes dsmalloc instances under the hood.

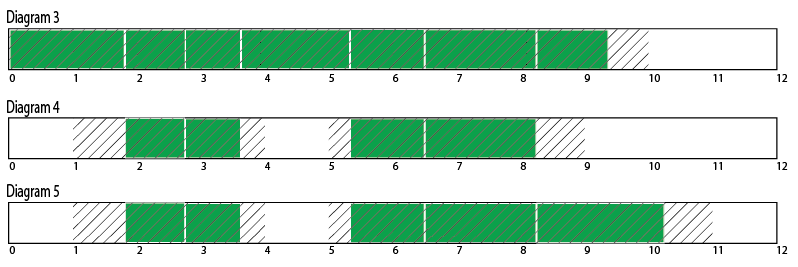

The common allocator creates one dsmalloc instance for allocations larger than 256 bytes and 20 dsmalloc instances covering allocations at every 8 byte size interval less than 64 bytes and every 16 byte interval between 64 and 256 bytes. The instance for large allocations uses a 32 megabyte segment size and 64 kilobyte pages whereas the small allocation instances use 64 kilobyte segments. Using page-sized segments for the small allocation regions is a minor optimization that removes the need for dsmalloc to track address reservation and memory commission separately for these regions.
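
The routing from request size to region can be sketched as a small function. This is an illustration rather than the engine's code: the name `region_index_for_size`, the numbering of the regions, and the treatment of requests that fall exactly on 64 or 256 bytes are assumptions.

```c
#include <stddef.h>

enum {
    SMALL_REGION_COUNT = 20, /* 8 classes of 8 bytes plus 12 classes of 16 bytes */
    LARGE_REGION_INDEX = 20  /* the single instance for everything above 256 bytes */
};

/* Maps a request size to a region index in the range [0, 20]. */
static unsigned region_index_for_size(size_t size) {
    if (size == 0) {
        size = 1; /* treat a zero-byte request like the smallest class */
    }
    if (size <= 64) {
        /* classes 8, 16, ..., 64 map to indices 0..7 */
        return (unsigned)((size + 7) / 8 - 1);
    }
    if (size <= 256) {
        /* classes 80, 96, ..., 256 map to indices 8..19 */
        return (unsigned)(8 + (size - 64 + 15) / 16 - 1);
    }
    return LARGE_REGION_INDEX;
}
```

Under these assumptions a 24-byte request lands in region 2, a 100-byte request rounds up to the 112-byte class in region 10, and anything larger than 256 bytes goes to the large-allocation instance.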

Bucketing small allocations into so many discrete regions significantly reduces external fragmentation in our games, despite creating a modest increase in internal fragmentation. Since only allocations within a single region need to be synchronized, it also has a side benefit of greatly reducing contention between allocations from multiple threads.

It is worth noting that since the various small allocation regions are essentially used for fixed-size allocations, they could be more efficiently implemented as dedicated fixed-block allocators. We have such an implementation in the engine, but dsmalloc (like dlmalloc) already implements an internal small, fixed-block allocation optimization, so in practice it is more convenient to use dsmalloc instances for everything and almost as efficient.

One key benefit of using dsmalloc instances for small allocations instead of a pure fixed-block allocator is that it offers us more flexibility in how the small allocation regions are configured. At the time I was implementing this in FEAR 3, minimizing fragmentation was our top concern, but in the future we might choose to prioritize thread synchronization efficiency over memory consumption. Instead of routing allocations to regions based on their size, we could create just a few small allocation regions and cycle between them based on thread contention. The idea is to allow a thread to try to lock a region for allocation, but rather than waiting if the region is already in use by another thread, simply move on to another region and try again.
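
A minimal sketch of that try-and-move-on idea, using C11 atomics as the try-lock, might look like the following. Nothing here comes from the engine: the region count, the `Region` type, and the `acquire_region` and `release_region` functions are hypothetical, and a production version would probably fall back to a blocking lock instead of spinning when every region is busy.

```c
#include <stdatomic.h>
#include <stddef.h>

#define REGION_COUNT 4 /* assumed number of small allocation regions */

typedef struct Region {
    atomic_flag busy; /* set while some thread is allocating from this region */
    /* a dsmalloc instance would live here */
} Region;

static Region g_regions[REGION_COUNT] = {
    { ATOMIC_FLAG_INIT }, { ATOMIC_FLAG_INIT },
    { ATOMIC_FLAG_INIT }, { ATOMIC_FLAG_INIT }
};

/* Tries each region in turn, starting at `preferred`, and returns the index
   of the first one it manages to lock. Never waits on a single busy region. */
static size_t acquire_region(size_t preferred) {
    for (;;) {
        for (size_t i = 0; i < REGION_COUNT; ++i) {
            size_t index = (preferred + i) % REGION_COUNT;
            if (!atomic_flag_test_and_set_explicit(&g_regions[index].busy,
                                                   memory_order_acquire)) {
                return index; /* the flag was clear, so this thread now owns it */
            }
        }
        /* every region was busy: go around again */
    }
}

static void release_region(size_t index) {
    atomic_flag_clear_explicit(&g_regions[index].busy, memory_order_release);
}
```

A thread would call `acquire_region`, allocate from the region it was given, and then call `release_region`. A free still has to go back to the region that owns the pointer, so only the allocation path gets to choose freely.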

Dsmalloc is flexible enough to support both of these strategies efficiently or, in fact, any hybrid combination of the two.

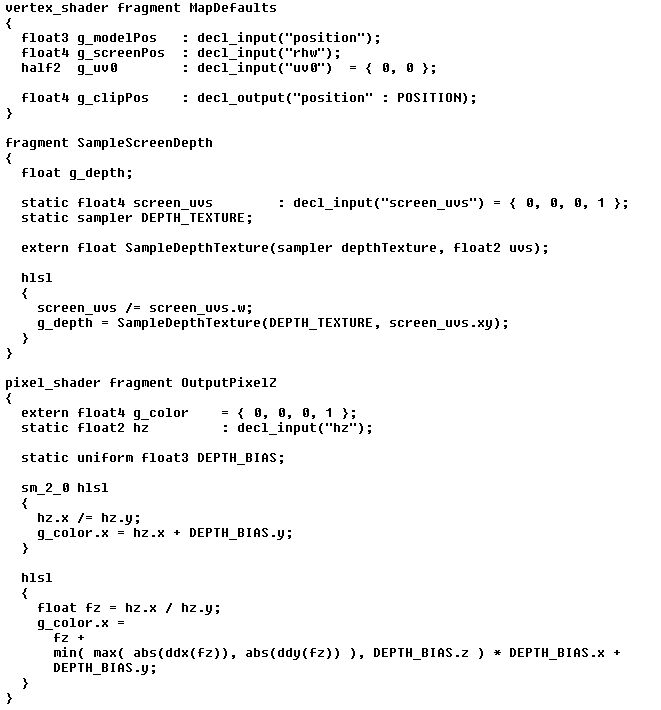

The dsmalloc callbacks are designed to be easily mappable to OS functions such as VirtualAlloc in Windows, but sometimes these functions are too expensive to be used directly. To improve performance, on some platforms the Despair general allocator utilizes a commit cache. The commit cache is a simple direct-mapped cache that sits between the dsmalloc commit callback and the OS. Dsmalloc already optimizes allocation order to maximize cache efficiency, and this benefits the commit cache as well. A 32 megabyte commit cache is probably overly generous, but it guarantees that OS-level calls don’t show up in our profiles even during content streaming transitions.

Having the cache implemented outside dsmalloc is also useful. When memory is extremely tight, anything that doesn’t utilize the general allocator could fail even though memory is still available in the commit cache. In those extreme cases the commit cache can be manually prompted to return pages to the system, ensuring that OS-level allocations never fail because the memory they need is held in a cache.
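
The article does not describe the cache's internals, so the following is only one plausible reading of "direct-mapped": each page address hashes to exactly one slot, a decommitted page is parked in its slot instead of going back to the OS, and a later commit of the same page is satisfied without an OS call. The OS calls are replaced with counting stubs so the sketch stays self-contained, and every name in it is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

#define COMMIT_PAGE_SIZE ((uintptr_t)64 * 1024) /* 64 KB pages */
#define CACHE_SLOTS 512                         /* 512 * 64 KB = 32 MB */

/* Stand-ins for the real OS calls; here they only count invocations. */
static unsigned g_os_commits, g_os_decommits;
static void os_commit(void* page)   { (void)page; ++g_os_commits; }
static void os_decommit(void* page) { (void)page; ++g_os_decommits; }

/* Each slot holds one page that is still committed but unused, or NULL. */
static void* g_slots[CACHE_SLOTS];

static size_t slot_for(void* page) {
    return (size_t)(((uintptr_t)page / COMMIT_PAGE_SIZE) % CACHE_SLOTS);
}

/* Commit callback: a hit means the page never left memory. */
static void cached_commit(void* page) {
    size_t slot = slot_for(page);
    if (g_slots[slot] == page) {
        g_slots[slot] = NULL; /* hit: hand the cached page back, no OS call */
        return;
    }
    os_commit(page);          /* miss: ask the OS */
}

/* Decommit callback: park the page, evicting the slot's previous occupant. */
static void cached_decommit(void* page) {
    size_t slot = slot_for(page);
    if (g_slots[slot] != NULL) {
        os_decommit(g_slots[slot]);
    }
    g_slots[slot] = page;
}

/* Manual flush for when memory is tight: return every cached page to the OS. */
static void cache_flush(void) {
    for (size_t slot = 0; slot < CACHE_SLOTS; ++slot) {
        if (g_slots[slot] != NULL) {
            os_decommit(g_slots[slot]);
            g_slots[slot] = NULL;
        }
    }
}
```

In this reading, `cache_flush` plays the role of the manual prompt described above: it is the hook that lets the rest of the engine reclaim cached pages before an OS-level allocation would otherwise fail.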

There is one additional complication that plagues the consoles. On both the Xbox 360 and the PlayStation 3, the GPU does not share the CPU’s memory management unit. On the Xbox 360 the GPU requires physically contiguous memory for all resources, and on the PS3 the GPU has a separate MMU with only 1 megabyte page granularity. Since we use dsmalloc with 64 kilobyte pages to minimize fragmentation, this means we’re susceptible to physical memory fragmentation when it comes to resources allocated for the GPU. On the Xbox 360, unbeknownst to many developers, the OS copes with this automatically. When a physical allocation request can’t be satisfied due to physical memory fragmentation, the Xbox 360 operating system locks the entire address space and defragments physical memory pages (by memcpy’ing physical pages and remapping virtual addresses) to accommodate the request.

On the PS3 the OS doesn’t provide this service automatically, but the same basic need exists. 64 kilobyte pages must be locked and relocated to generate contiguous 1 megabyte pages appropriate for the GPU. Thankfully with a little bit of acrobatics it is possible to do just that without violating certification requirements.

Although the operation is every bit as expensive as it sounds, it proved necessary to keep FEAR 3 from crashing. FEAR 3 ran dangerously close to the limits of memory on the PlayStation 3 and survived only by allowing flexible budgets for every kind of memory. GPU allocations in main memory varied by over 100% between streaming regions, so not only did CPU addressable memory need to be defragmented for use by the GPU, but GPU addressable memory also had to be defragmented continually and returned to the CPU. The really expensive CPU defragmentation provided a safety net against crashes at all times, but thankfully it was only needed at major chapter transitions where a load screen was expected and a 100 ms hitch was infinitely preferable to an out-and-out crash.

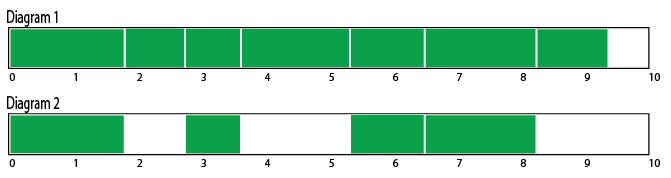

When I undertook to rewrite the Despair memory allocator near the end of FEAR 3, the overarching goal was to never fail to satisfy an allocation request due to prior memory usage patterns. If a level fit just barely into memory after a fresh boot, we wanted it to fit equally well in memory after a hundred hours of continuous play. While I can’t pretend that fragmentation doesn’t exist at all in the system I devised, I can say that it was so dramatically reduced that we were able to establish an upper bound on fragmentation and enforce budgets for our content that were reliable regardless of the order or duration in which the game was played.

The new Despair memory allocator based on dsmalloc ran the same content as the old memory allocator on all platforms with a slightly lower lower bound on memory, a much lower upper bound on memory, and it even managed a slightly lower run-time performance cost.